Temporality Transfer

How to Extract The Fingerprint of A Film?

The project attempts to extract the fingerprint of a film, in our case, its temporal qualities, and transfer that into other media.

For comparison, the left part of the video below is our initial input, a 1-minute clip from the documentary ‘Peter Zumthor: the Thermae of Stone’; the right part is the final output, a Unity architectural visualization. One can feel the resemblance between the two.

The temporal and cinematographic qualities of the film are revived in a new context.

Work at Harvard GSD | 2018 | Teammate: Mengfei Wang | Advisor: Panagiotis Michalatos | Skills: Computational Design, Unity C# Development

Manifesto

“As humans, we are innately temporal creatures.

We incessantly examine the vicissitudes in our surroundings and in ourselves.

Yet temporality is underrepresented in design.

TEMPORALITY TRANSFER is a step towards a more temporality-rich design world.”

Brief

Our project aims to understand the temporal quality of a film and transfer that into other media.

For each film, its temporality is examined at different levels of resolution: from the entire film, to a selected group of shots, to a single shot.

Then we try to reproduce the same temporality in a new context. In this case, architectural visualization is chosen as our application.

Initial Input

We chose an architectural documentary as the initial input for the system to “learn” from, since we hope that our algorithm can eventually be applied to architectural visualization.

Also, compared to other genres (e.g. action or horror movies), the camera language of an architectural documentary is easier to digitize.

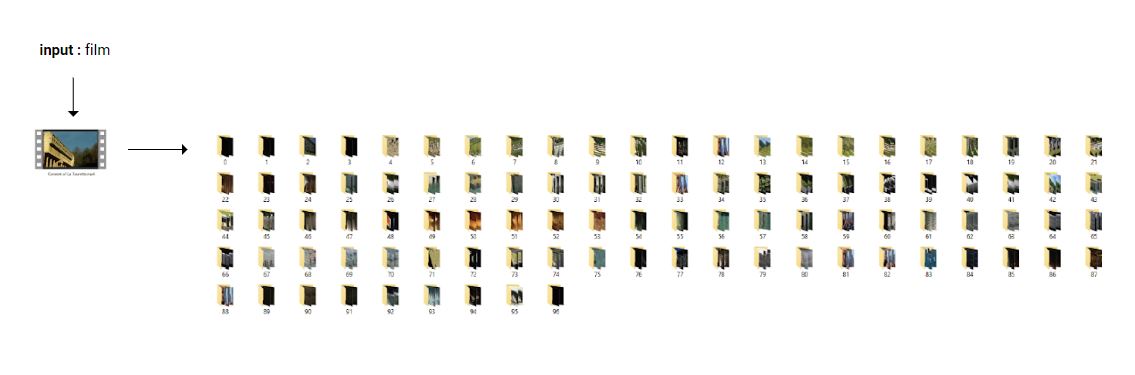

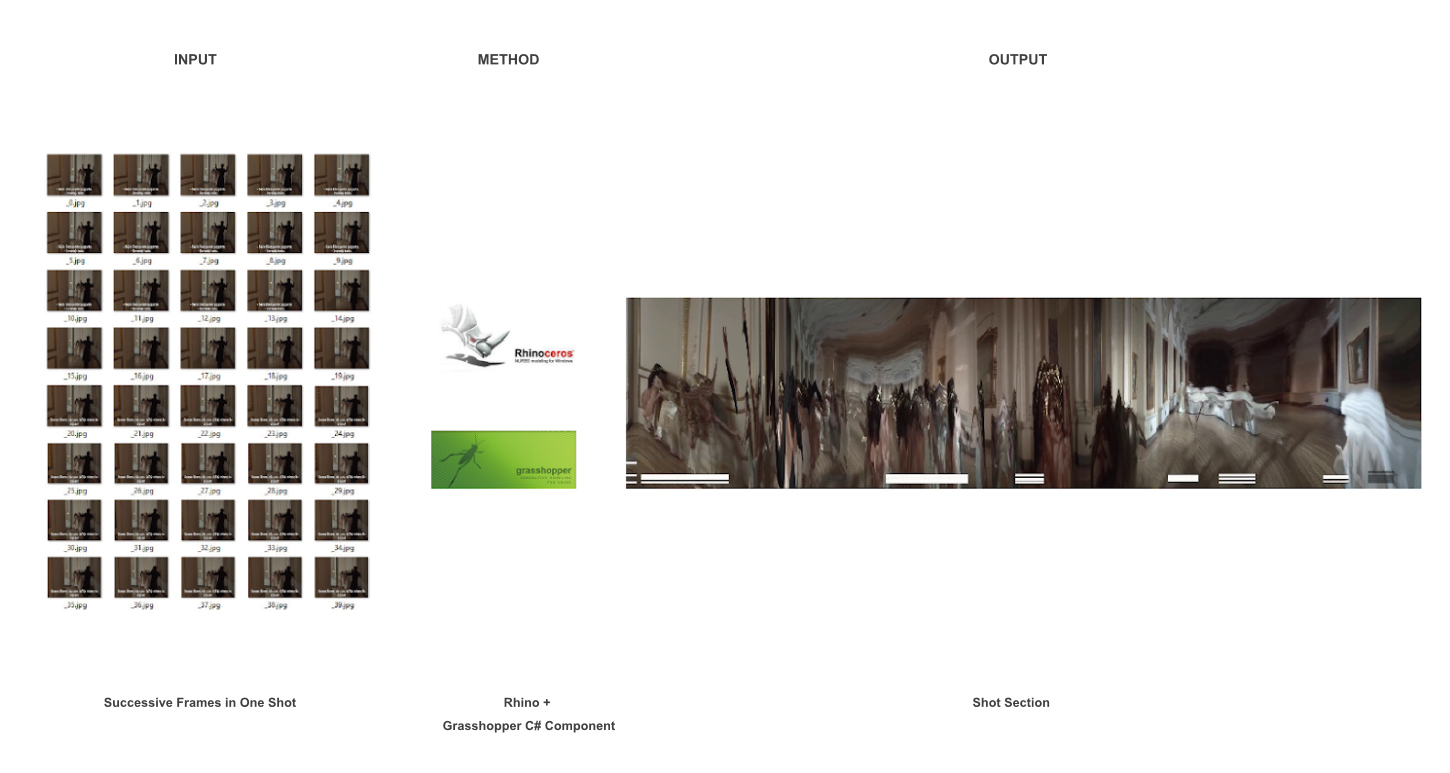

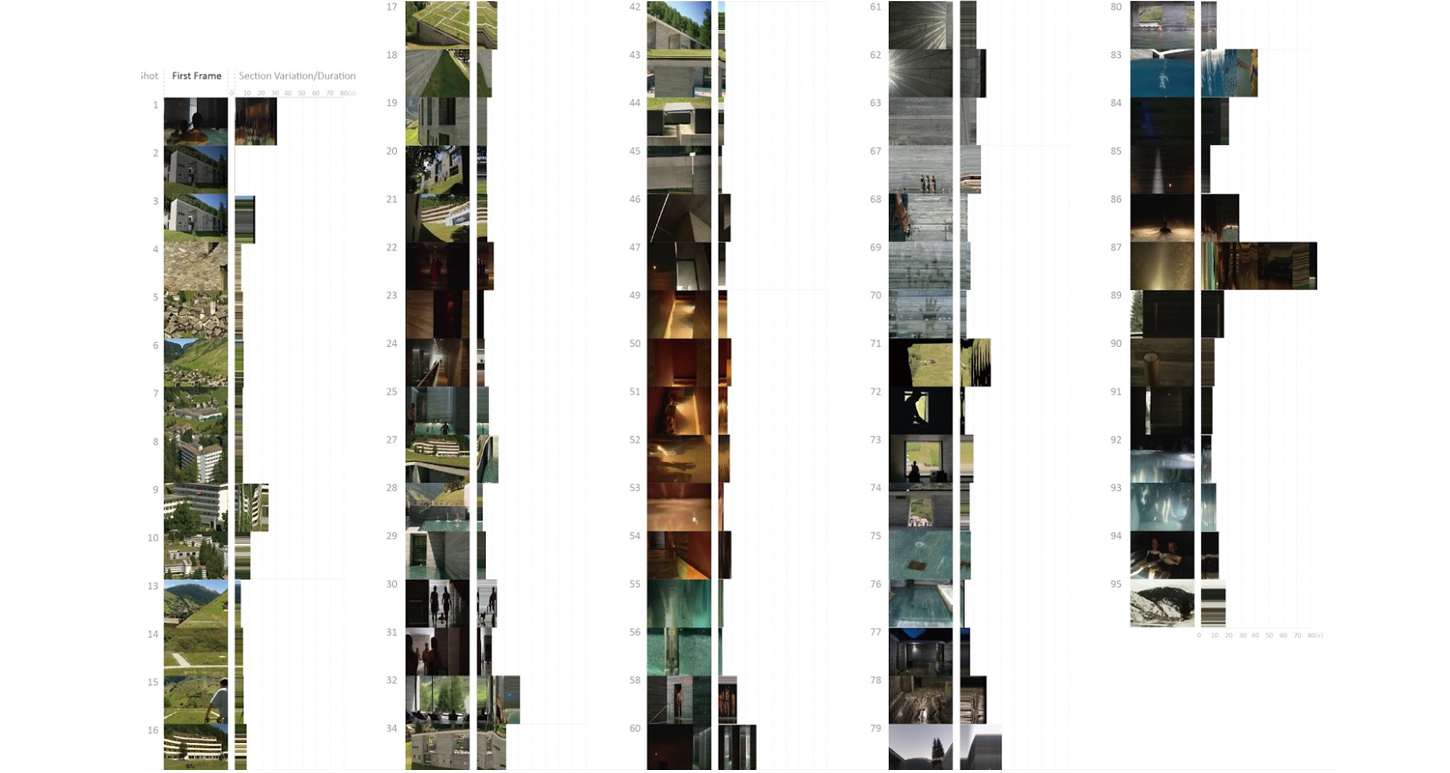

1. Cutting The Shots

To understand the overall film structure, the first step is to identify the shot cuts in the film. We cut the film into 97 separate shots and automatically saved the screenshots of each continuous shot into its own folder.

Then we classified the 97 shots into 4 categories according to each shot's signature screenshot:

shots of the architecture itself, shots of the architect, shots of the architectural model, and other shots.

Among those, we are only interested in shots of the architecture itself.

Putting the above shot classification result along the timeline of the film, we got a summary of the overall film structure.

2. Selected Shot Group Study

As stated above, we are only interested in shots of the architecture itself. The other shot groups were discarded.

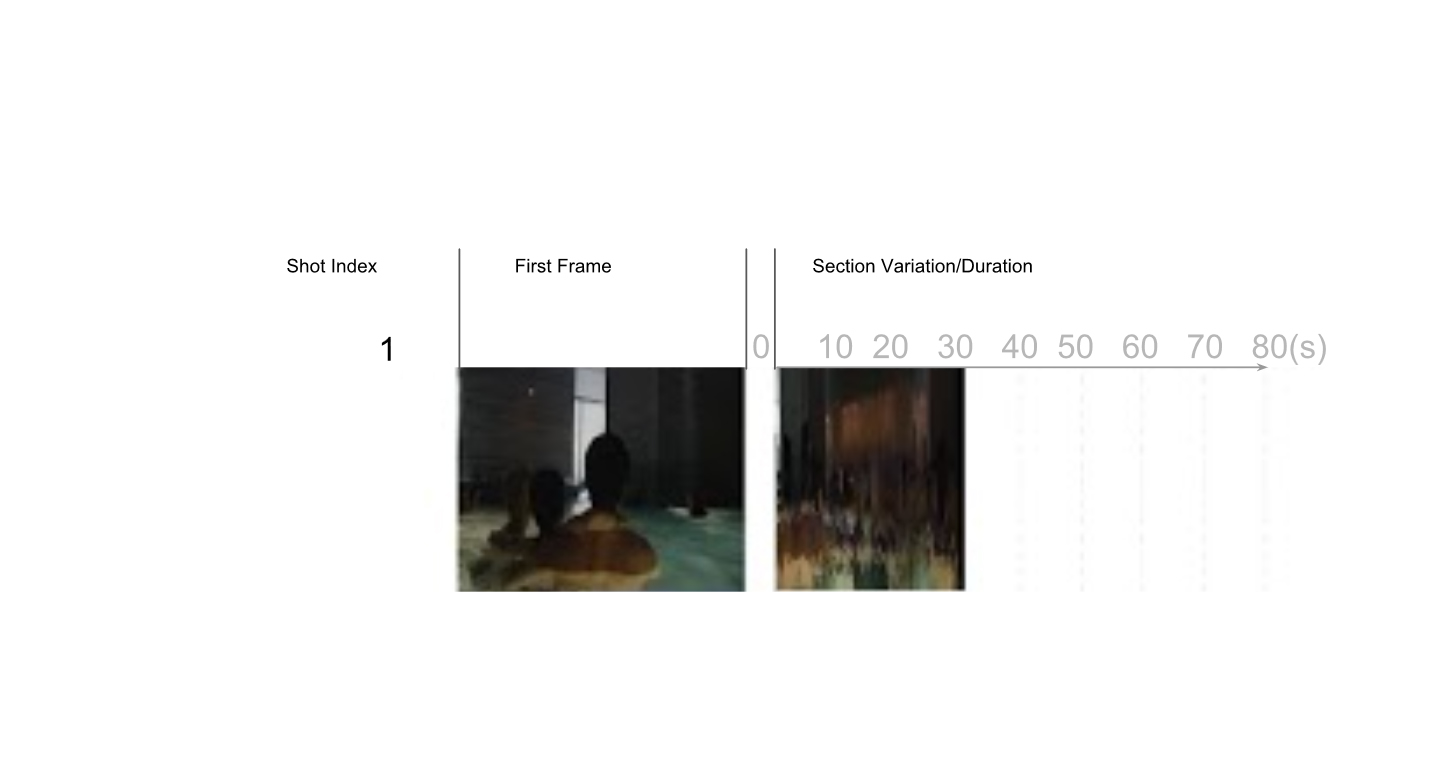

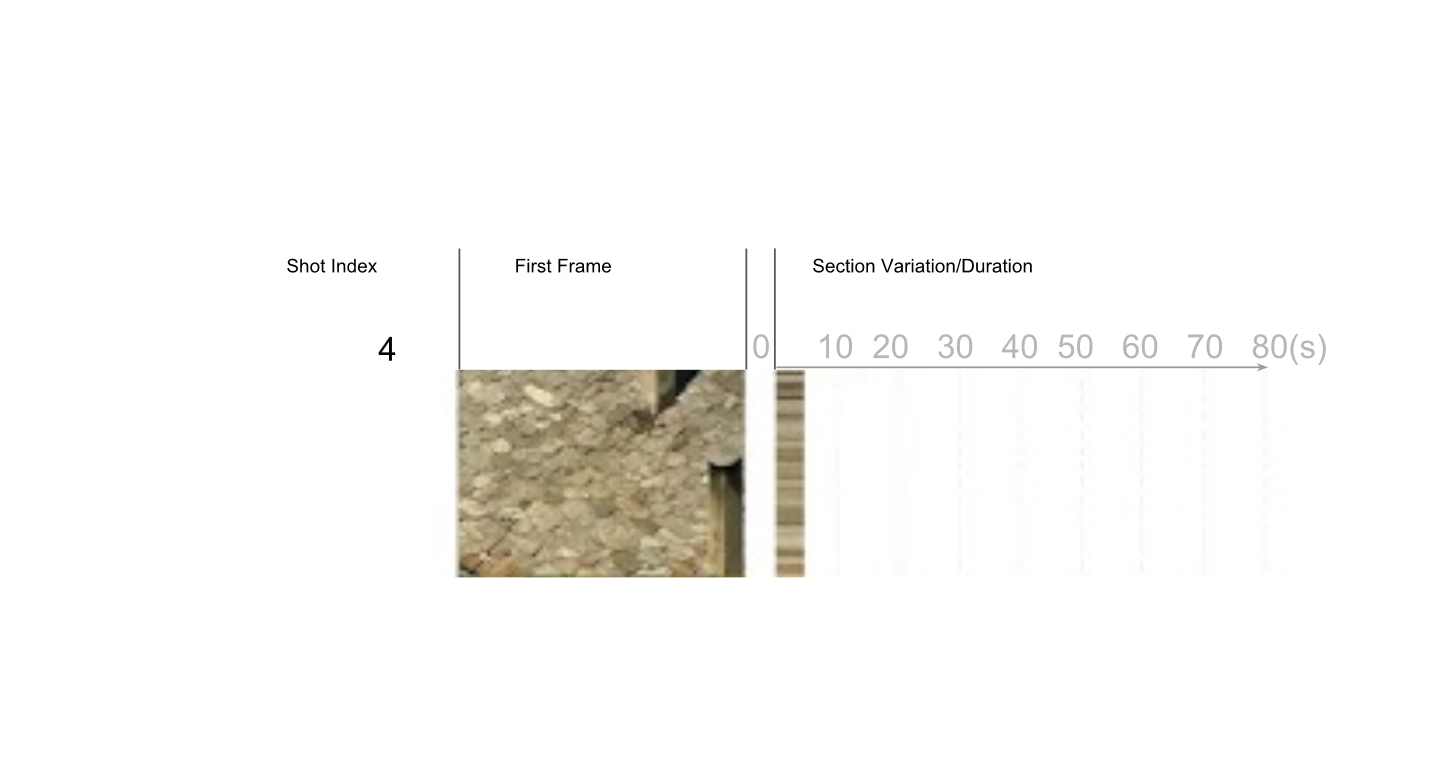

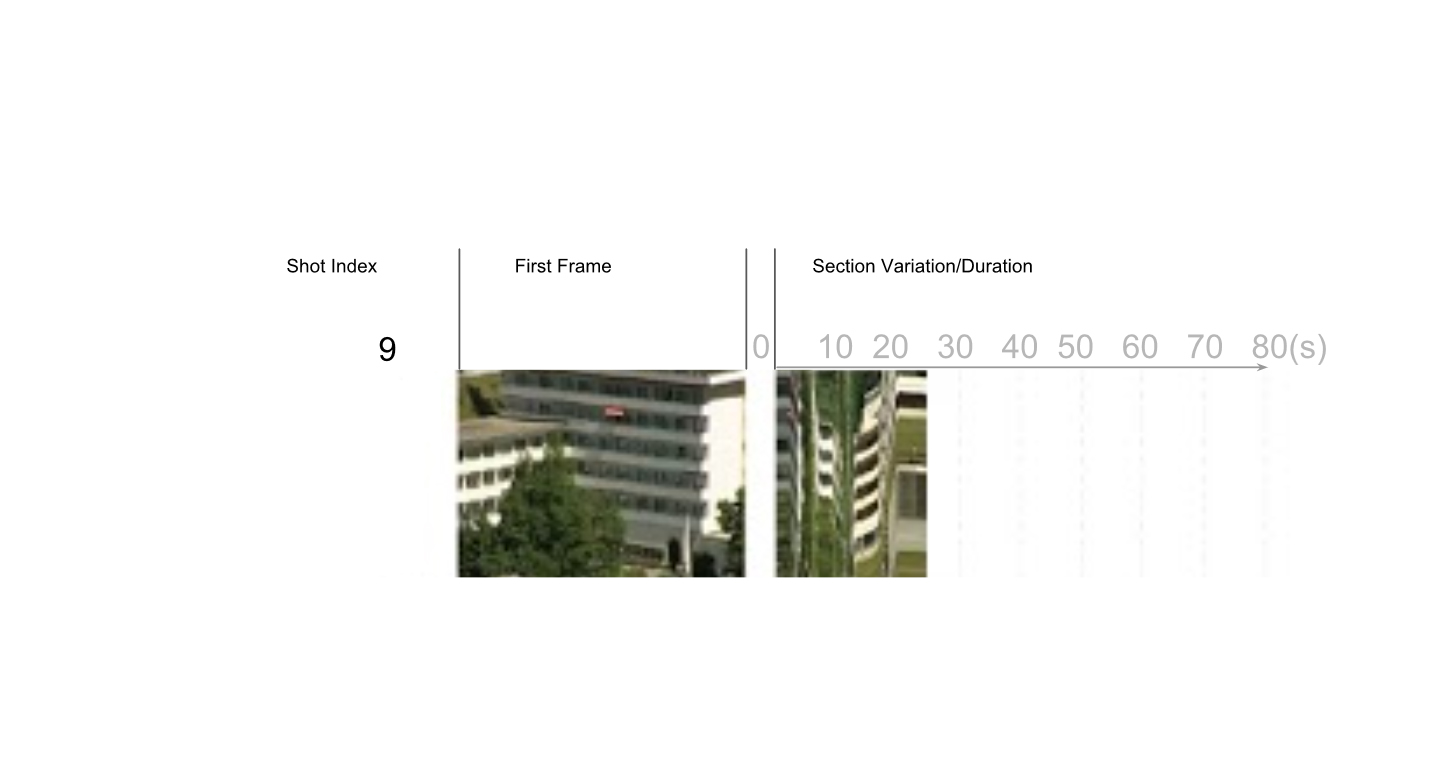

A static diagram was produced for each shot to study its basic visual properties (light, color, etc.) and their variation through time.

It can be seen that some shots are totally still, some have camera movement, while others have both camera and human motion.

Since it is hard for our software to analyze human motion, we decided to narrow our focus to the static shots and those that contain only camera movement.

That led us to choose shots 16-19, because this shot group has an appealing mix of the two types of shots we wanted.

3. Single Shot Analysis & Re-application

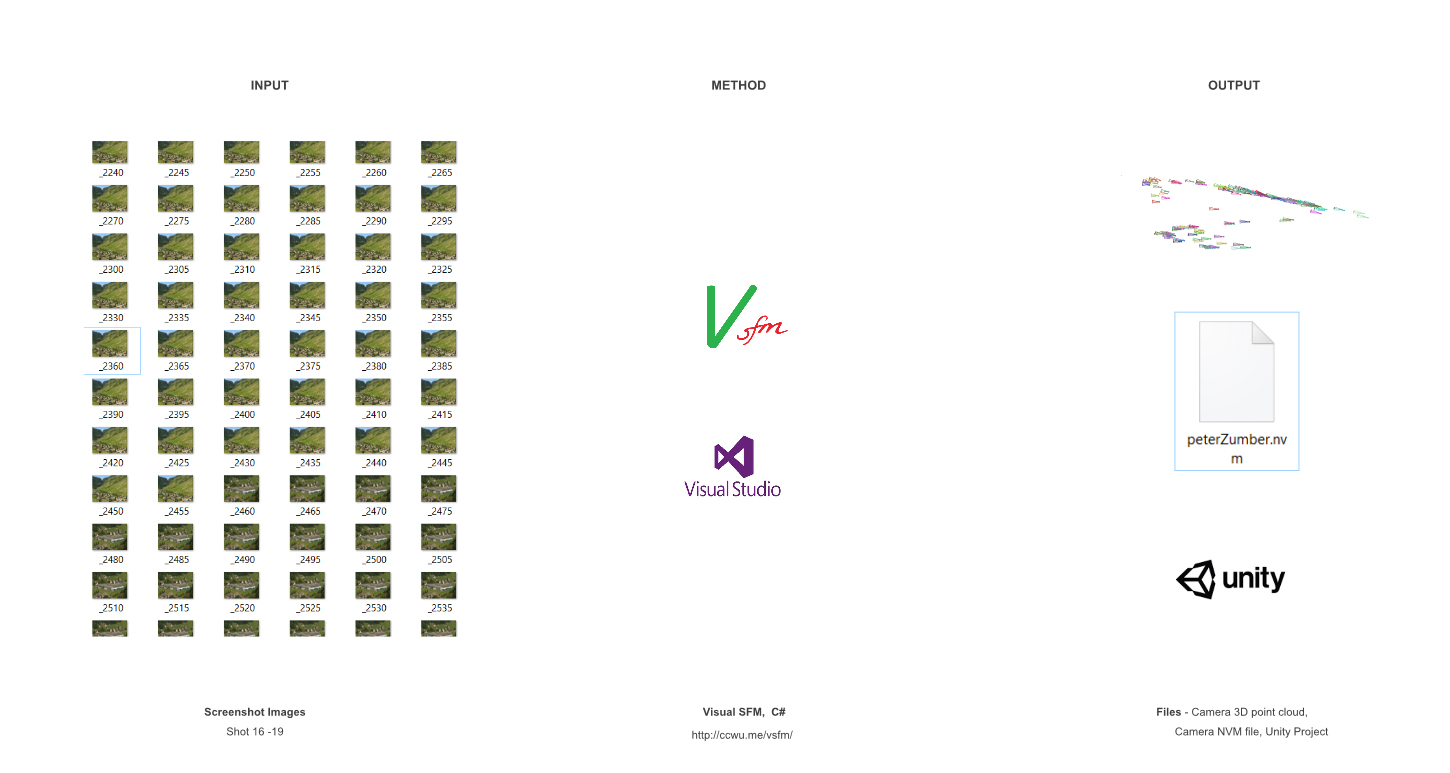

We then analyzed the temporality of shots 16-19, aiming to convert it into a digital format that can be reused in other contexts.

There are many dimensions of temporality in a film, e.g. how the lightness or color tone shifts, how the sound changes, or how the camera moves over a period of time.

Considering the tools we have as well as the future application we want, camera movement became our target subject for temporality.

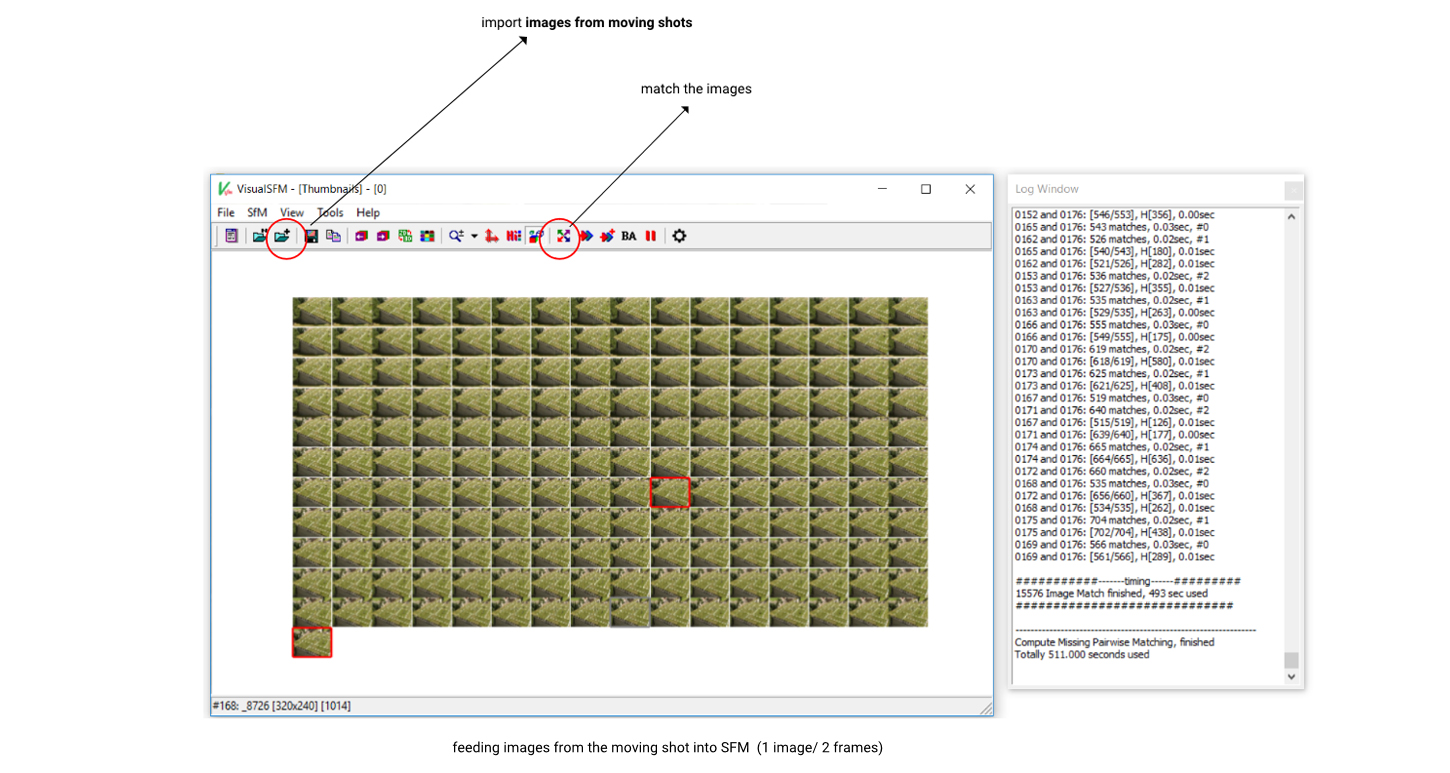

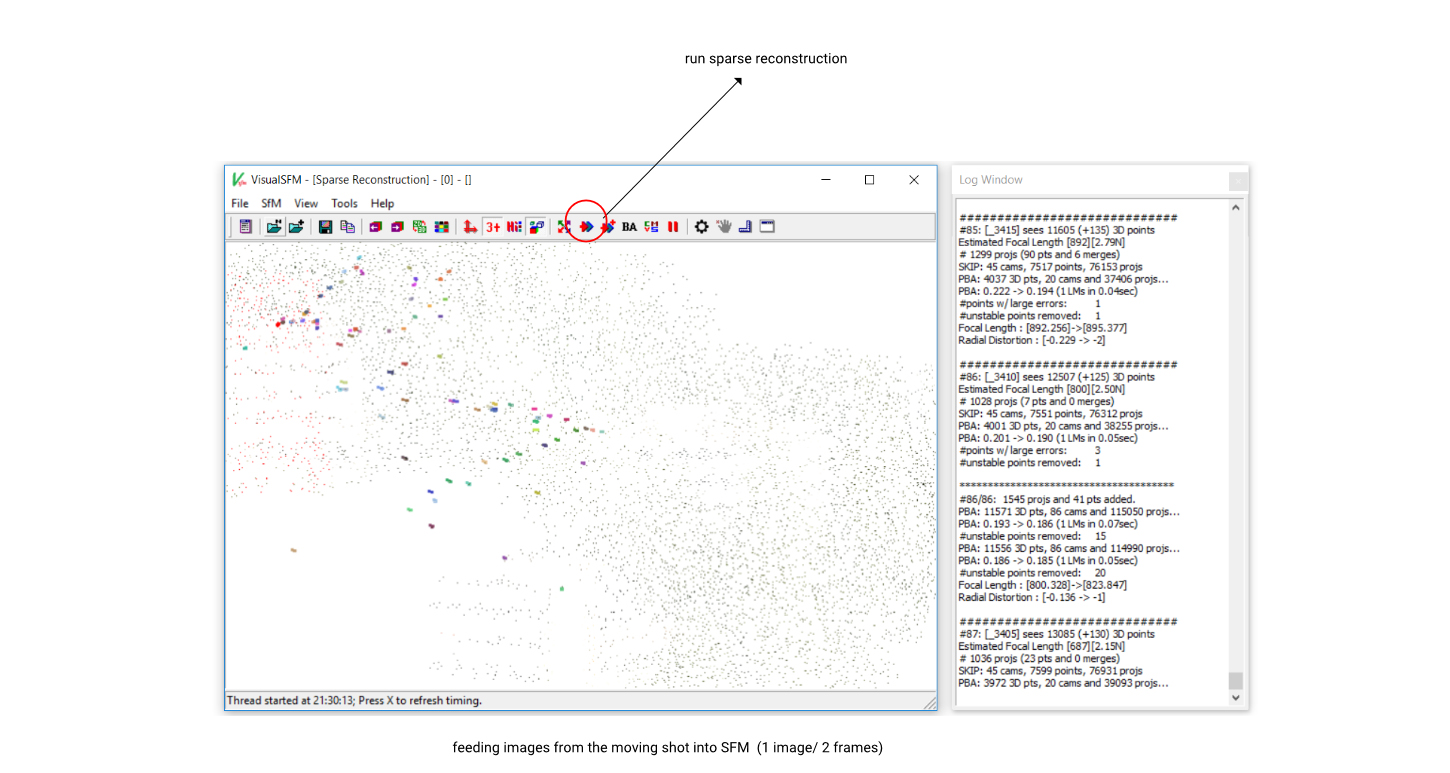

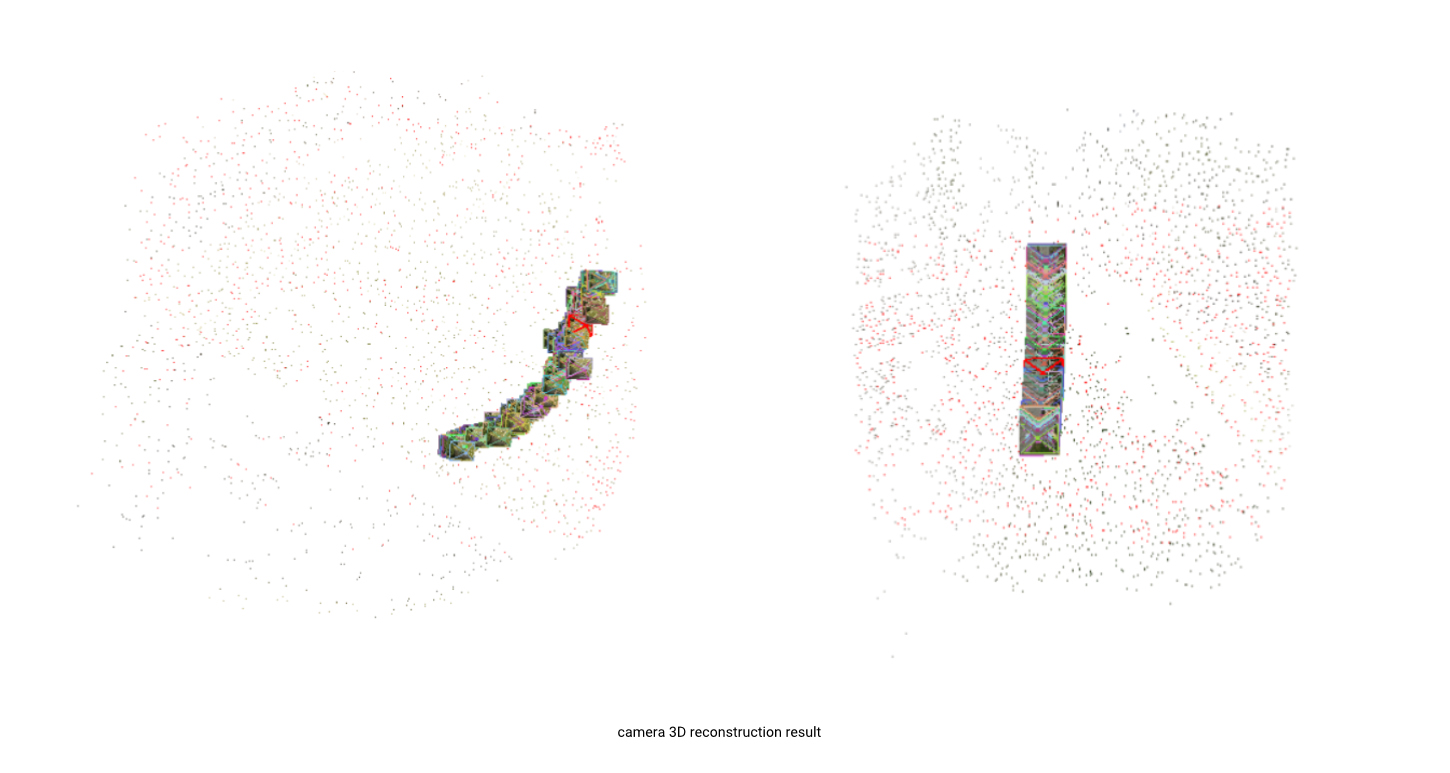

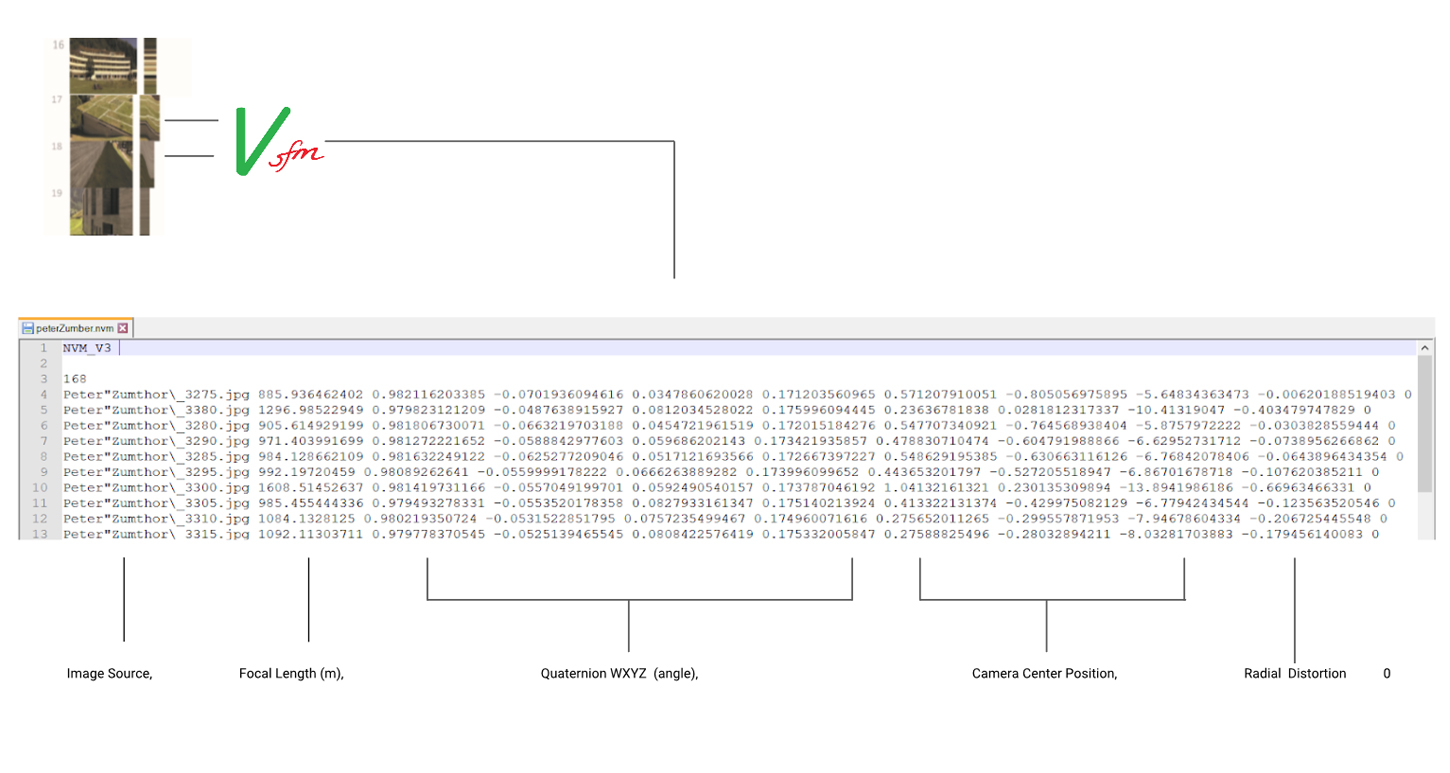

Structure from Motion (SfM) was used to recover the camera information in each shot. From a continuous group of frames, the software was able to estimate the camera position, angle, and focal length for each frame, then output the 3D camera point clouds.

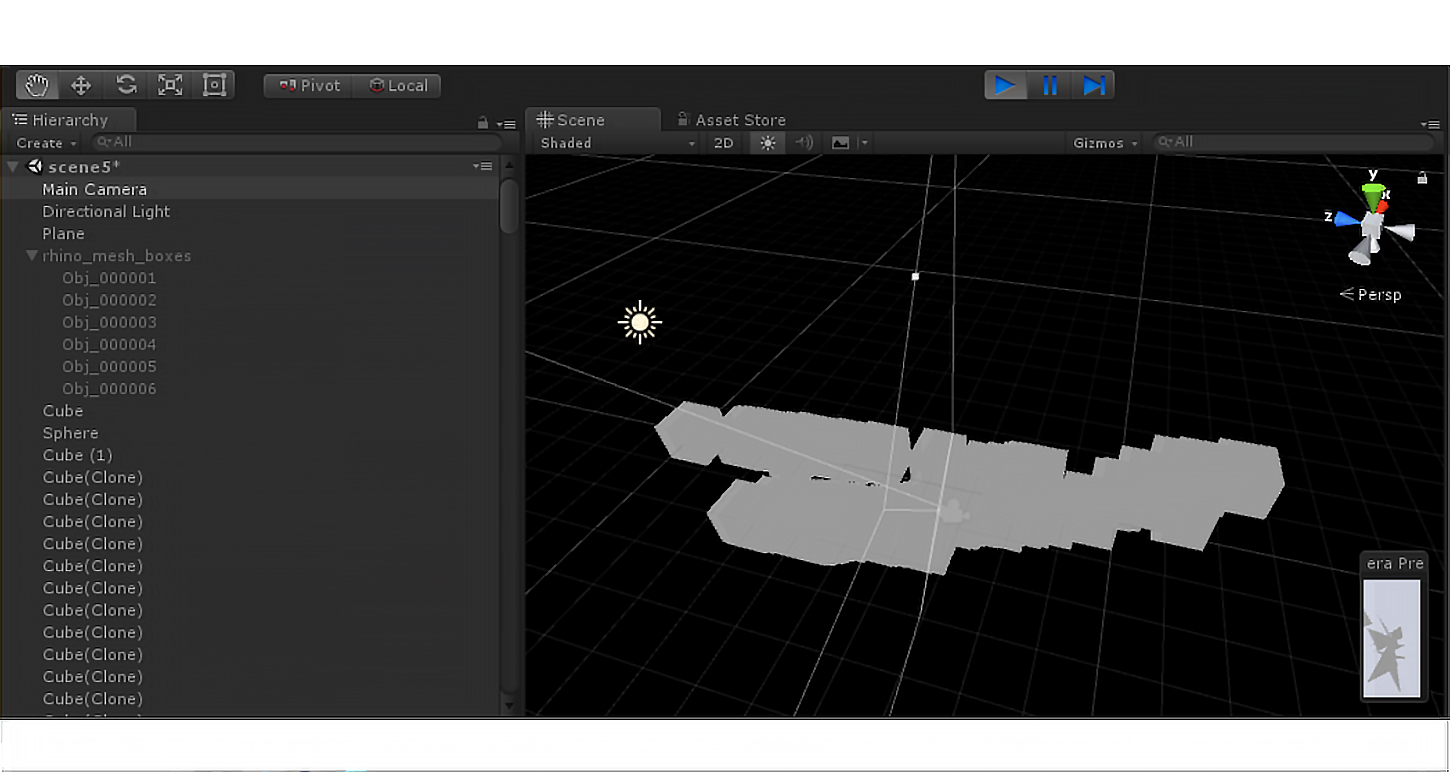

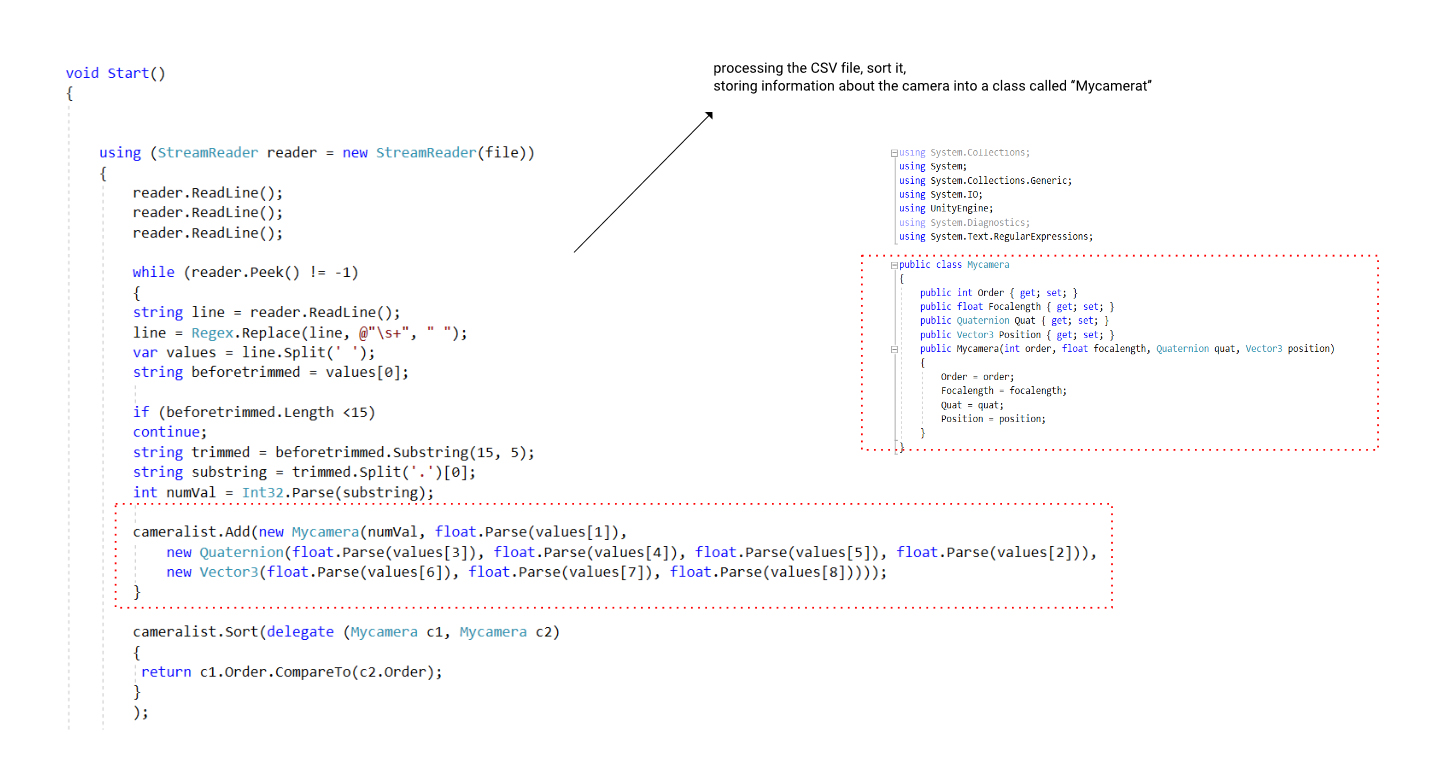

Then we reconstructed the camera path in Unity using the CSV (NVM) files.

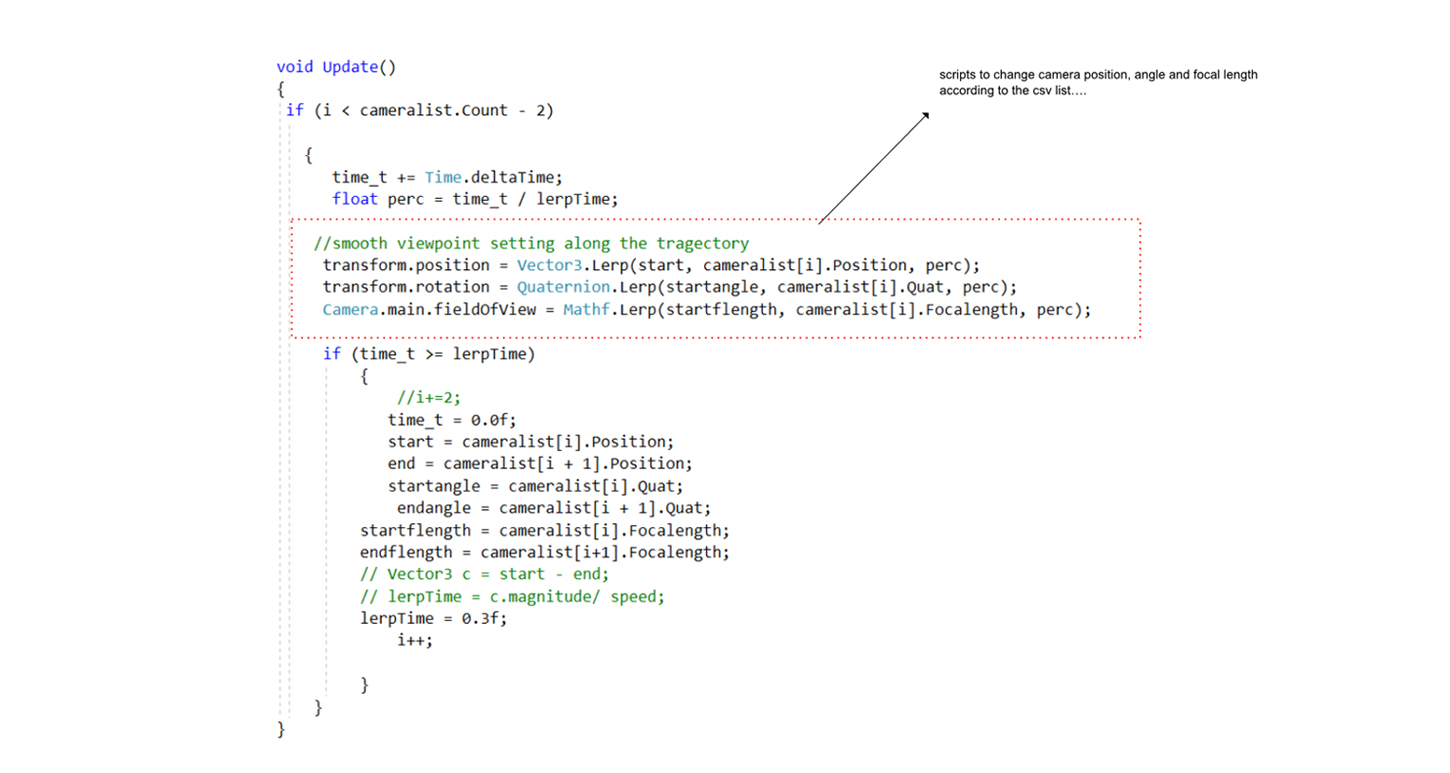

I wrote the C# scripts to process the CSV file, sort it, store information about the camera, and reuse that information to dynamically change the Main Camera position, angle, and focal length in Unity.

Final Output

The Unity video on the right is our final output. It scans the boxes (i.e. buildings) through the lens of the input film.

How the camera position, angle, and focal length shift over time, that is, the temporality of the input film with regard to its camera, has been revitalized in a new context.

Key Technicalities

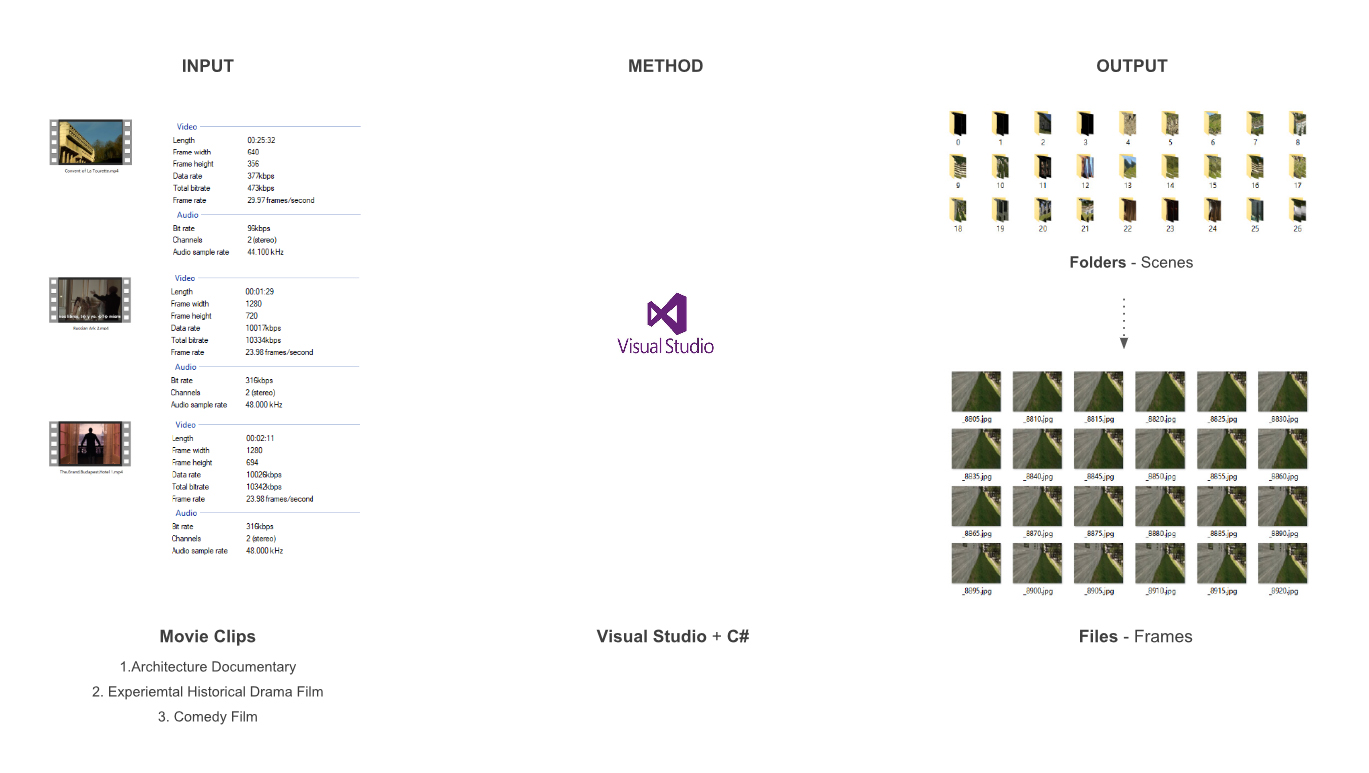

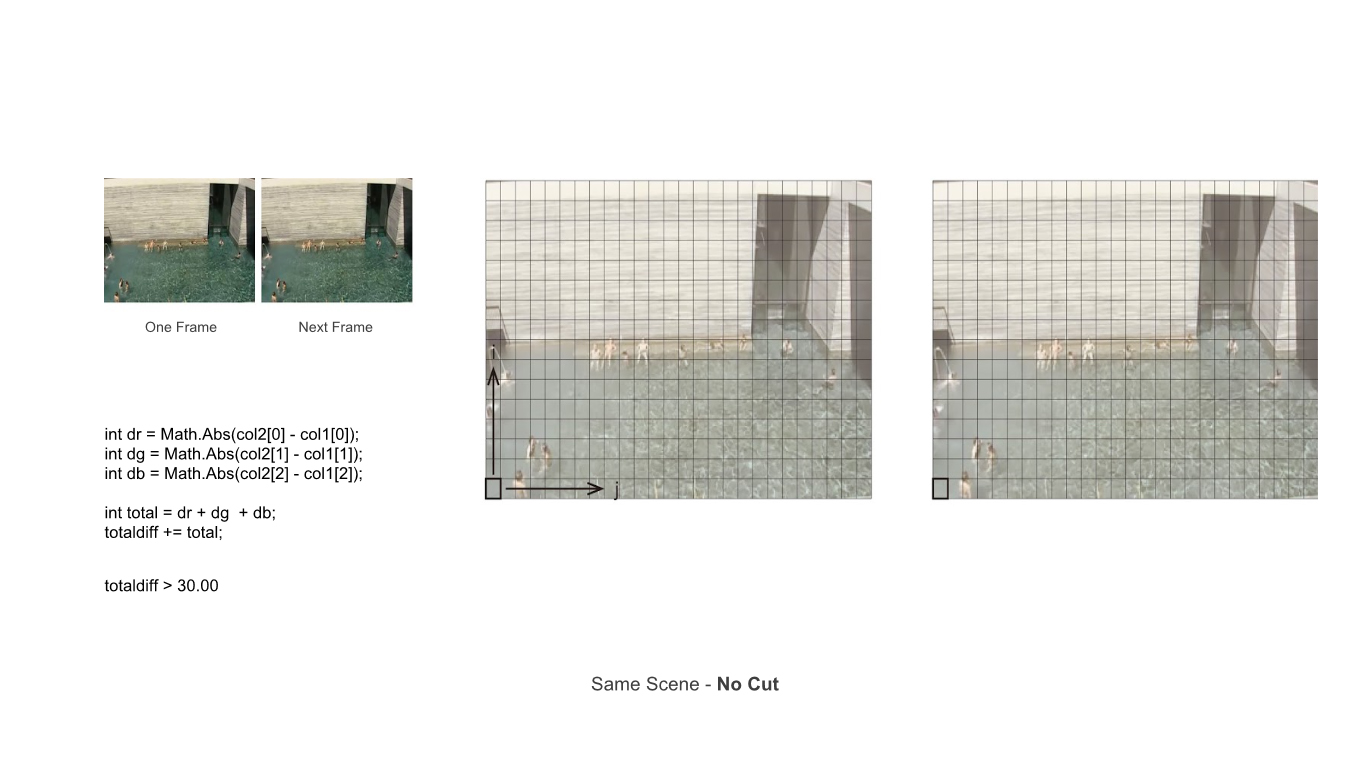

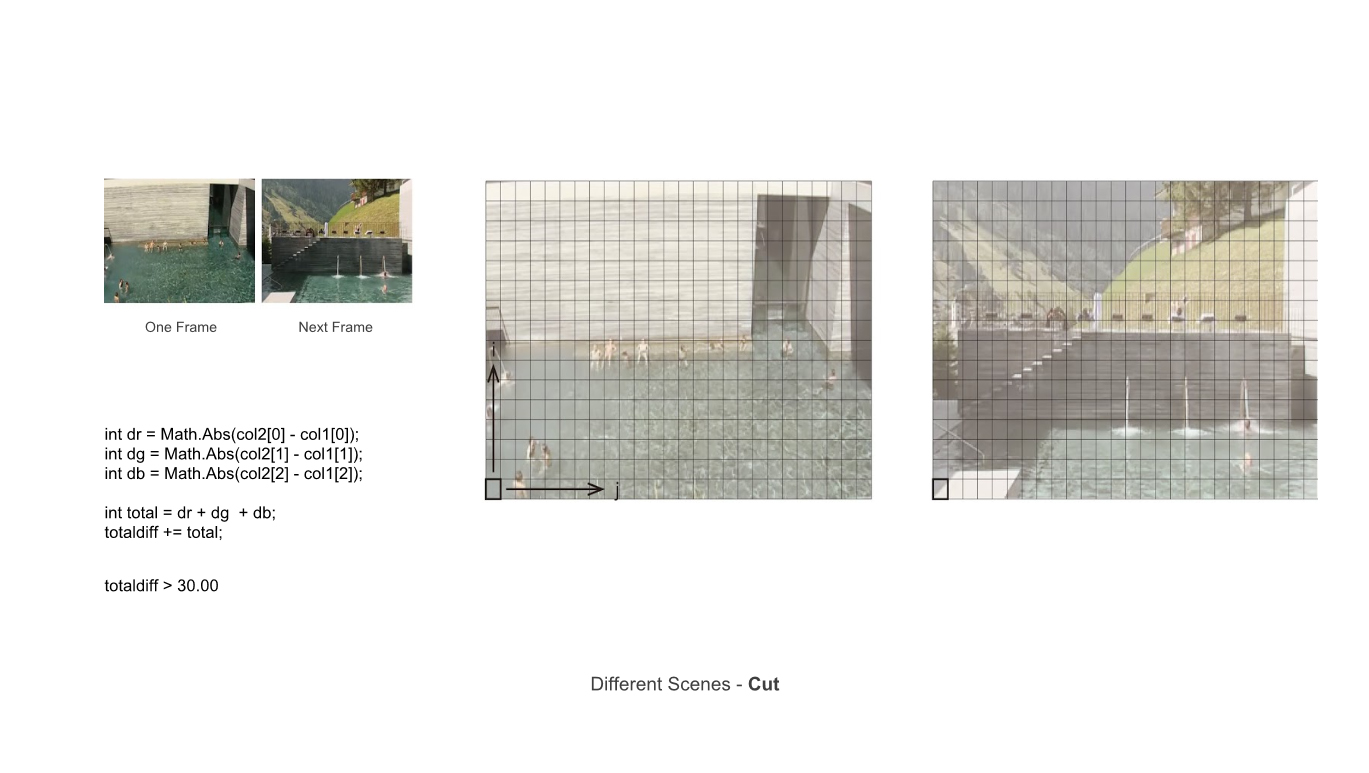

(1) How are the shot cuts made?

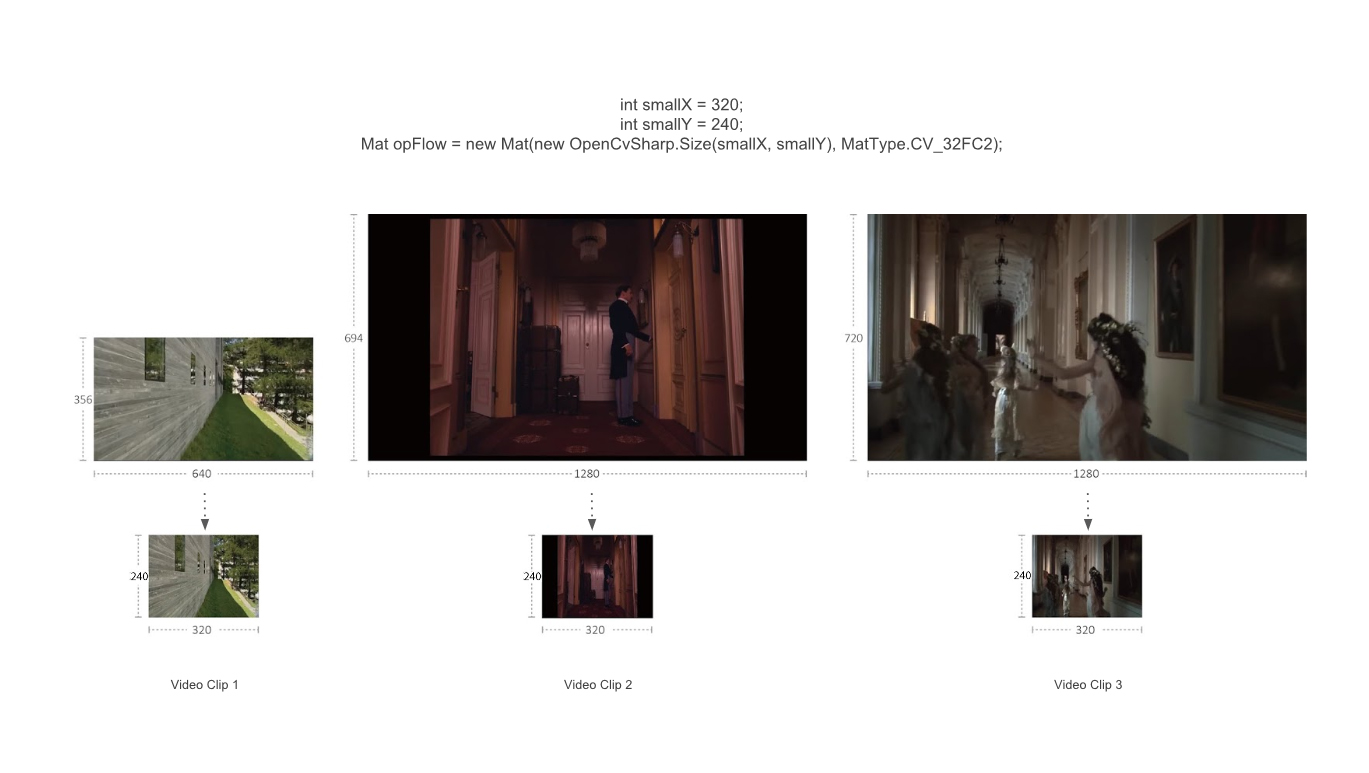

Using OpenCvSharp, we first generated a screenshot image for each frame and reduced the screenshot resolution to 320×240 to speed up processing.

Then a program was used to compare the screenshots. Whenever the difference between 2 successive screenshots exceeded a certain threshold (in our case, 30.00), that would be interpreted as a shot-cut, and the screenshots of each continuous shot would be stored into a separate folder.
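The thresholding step can be sketched as follows. This is a minimal illustration in Python rather than the OpenCvSharp program described above, and it assumes the difference between two screenshots is the mean absolute difference of their grayscale pixels; the source does not state which metric was used. The names `mean_abs_diff` and `split_into_shots` are hypothetical.

```python
from typing import List, Sequence

# A frame is a flattened list of grayscale pixel values (0-255).
Frame = Sequence[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference (an assumed metric)."""
    if not a or len(a) != len(b):
        raise ValueError("frames must be non-empty and of equal size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def split_into_shots(frames: Sequence[Frame],
                     threshold: float = 30.0) -> List[List[int]]:
    """Group frame indices into shots.

    A new shot starts whenever the difference between two
    successive frames exceeds the threshold.
    """
    shots: List[List[int]] = []
    for i, frame in enumerate(frames):
        if i == 0 or mean_abs_diff(frames[i - 1], frame) > threshold:
            shots.append([])  # a cut: open a new shot
        shots[-1].append(i)
    return shots


if __name__ == "__main__":
    dark, bright = [10] * 4, [200] * 4
    print(split_into_shots([dark, dark, bright, bright, dark]))
```

Each inner list corresponds to one folder of screenshots in the workflow above. Comparing only successive frames keeps the check cheap, but gradual transitions such as fades may fall below the threshold.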

We tested the program with 3 types of movie input: an architectural documentary, an experimental historical drama, and a comedy film. Our subject movie, Peter Zumthor: The Thermae of Stone (25’32’’), was divided into 97 shots by the program.

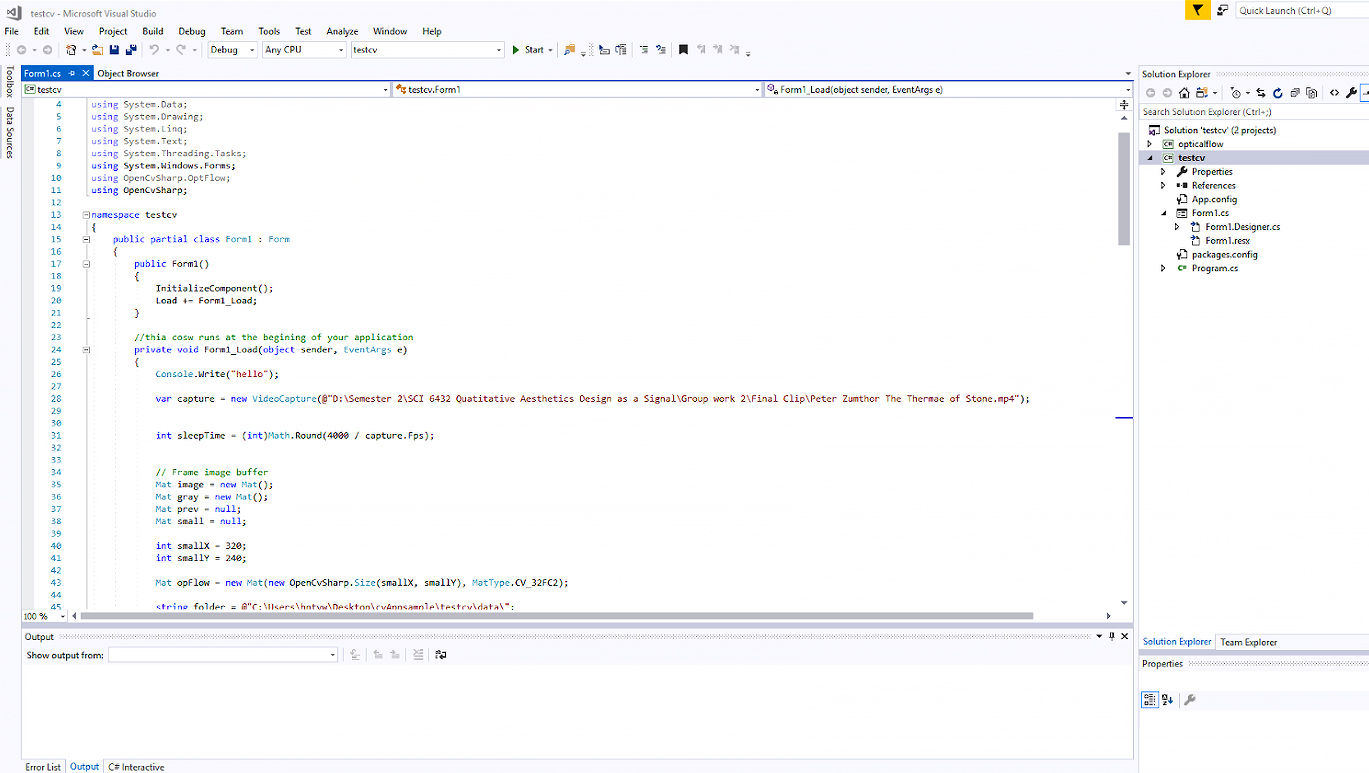

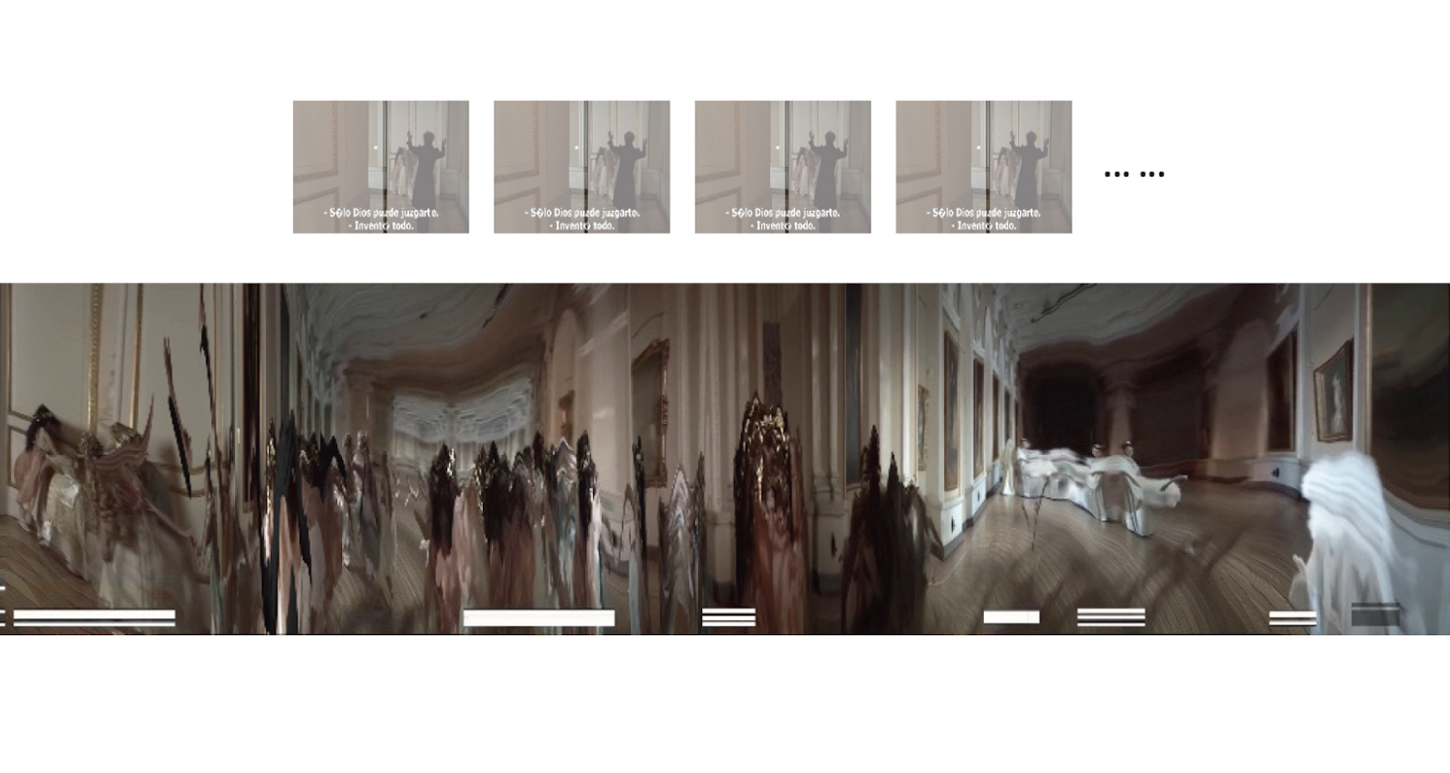

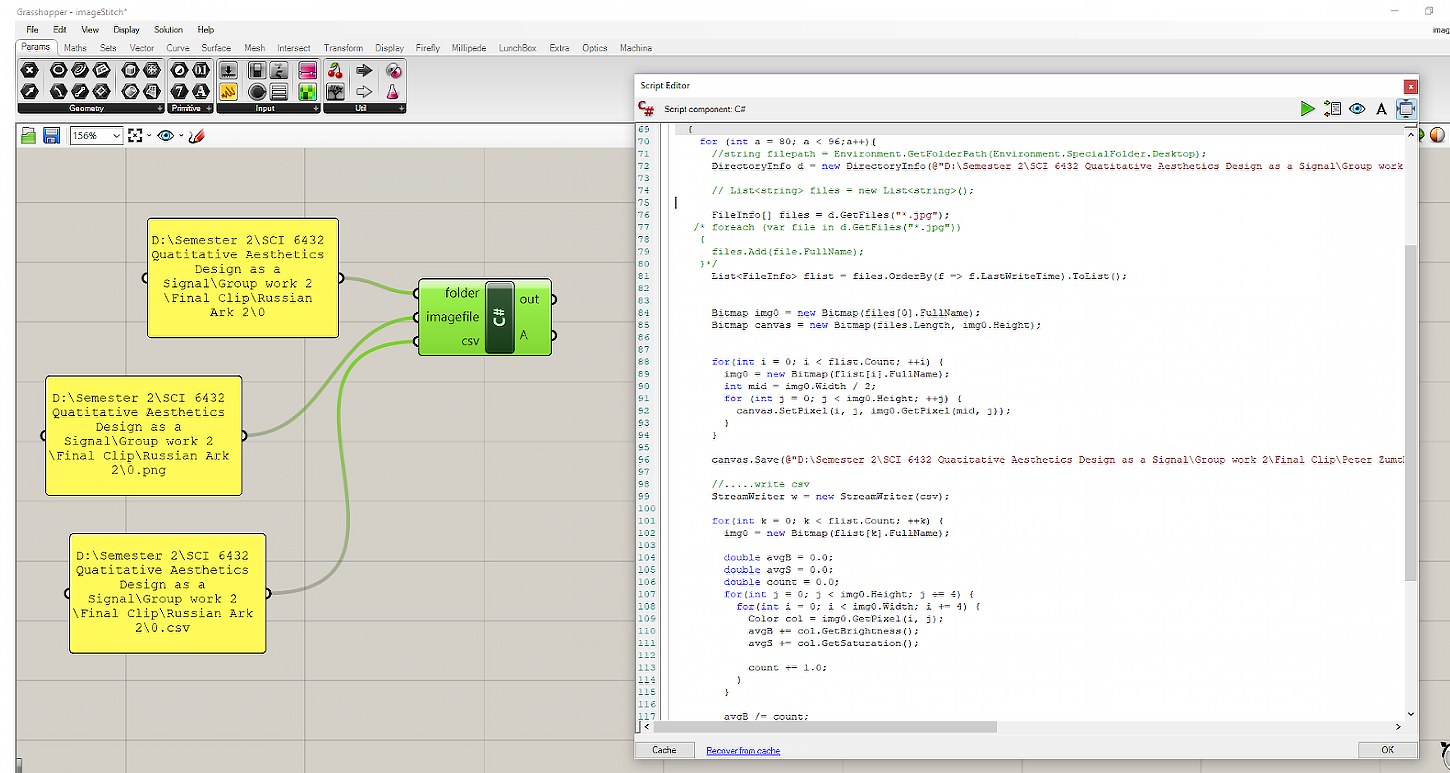

(2) How to produce a static diagram for an individual shot?

Rhinoceros, Grasshopper, and C# were used to visit each screenshot in a folder, cut a 1-pixel-wide section at its center and place the sections chronologically next to one another.

Thereby, we got a miniature of each shot revealing its temporality. It is easy to tell which shot is still, which has camera movement in it and which entails both camera and human motion.
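The slicing procedure can be sketched in a few lines. This is an illustrative Python version, not the Rhinoceros/Grasshopper/C# definition used in the project; it assumes each screenshot is already loaded as rows of pixel values, and the names `center_column` and `slit_scan` are hypothetical.

```python
from typing import List, Sequence

# An image is a list of rows; each row is a list of pixel values.
Image = Sequence[Sequence[int]]


def center_column(frame: Image) -> List[int]:
    """Return the 1-pixel-wide vertical section at the image center."""
    width = len(frame[0])
    return [row[width // 2] for row in frame]


def slit_scan(frames: Sequence[Image]) -> List[List[int]]:
    """Place the center sections of successive frames side by side.

    The result has one column per frame, in chronological order.
    """
    if not frames:
        return []
    height = len(frames[0])
    columns = []
    for frame in frames:
        if len(frame) != height:
            raise ValueError("all frames must have the same height")
        columns.append(center_column(frame))
    # Transpose the list of columns into rows of the output image.
    return [[col[y] for col in columns] for y in range(height)]


if __name__ == "__main__":
    f0 = [[1, 2, 3], [4, 5, 6]]
    f1 = [[7, 8, 9], [10, 11, 12]]
    print(slit_scan([f0, f1]))
```

In such a diagram a still shot shows up as horizontal bands, because every column is identical, while camera or subject motion bends or breaks the bands.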

(3) How to interpret camera info for each individual shot?

We selected shots 16 to 19 as the target group to derive temporality from. The sequence has 2 static shots and 2 moving shots.

The movement and stillness within each shot, as well as their chronological composition as a whole, are the focus of our study.

VisualSFM was used to recover the camera parameters in each shot. It ran feature matching between the various viewpoints to estimate the camera position, angle, and focal length in each frame, then output the 3D camera point clouds and the CSV files.

(4) How to transfer camera-temporality to a new context?

A C# script was used to process the CSV list, sort it in temporal order, and store the focal length, quaternion angle, and position of each camera in a class called “Mycamera”.
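The parse-and-sort step can be sketched as below. This is a Python illustration, not the project's C# script. The column layout (file name, focal length, quaternion w/x/y/z, position x/y/z) and the convention that the frame number is the last run of digits in the file name are assumptions made for the example; the actual CSV layout is not given in the source. `MyCamera`, `frame_index`, and `load_cameras` are hypothetical names.

```python
import csv
import io
import os
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MyCamera:
    frame: int                                   # temporal order key
    focal_length: float
    rotation: Tuple[float, float, float, float]  # quaternion (w, x, y, z)
    position: Tuple[float, float, float]


def frame_index(filename: str) -> int:
    """Read the frame number from a name such as 'shot17_0003.jpg'."""
    stem = os.path.splitext(filename)[0]
    digits = re.findall(r"\d+", stem)
    if not digits:
        raise ValueError(f"no frame number in {filename!r}")
    return int(digits[-1])


def load_cameras(csv_text: str) -> List[MyCamera]:
    """Parse the assumed 9-column rows and sort them in temporal order."""
    cameras = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 9:
            continue  # skip blank or incomplete rows
        v = [float(x) for x in row[1:9]]
        cameras.append(MyCamera(
            frame=frame_index(row[0].strip()),
            focal_length=v[0],
            rotation=(v[1], v[2], v[3], v[4]),
            position=(v[5], v[6], v[7]),
        ))
    cameras.sort(key=lambda c: c.frame)
    return cameras
```

Sorting matters because a reconstruction tool does not necessarily list cameras in the order the frames were shot.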

For moving shots 17 and 18, the Unity Update() function dynamically assigned the Main Camera its position, angle, and focal length according to the Mycamera list.

For static shots 16 and 19, we placed the Main Camera manually and held it for the matching duration. The two parts were then combined into one continuous camera mapping from the input film to Unity.
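One way to think about combining held static shots with per-frame moving shots is a single timeline that is sampled once per rendered frame, as a per-frame update would do. The sketch below is a Python illustration under stated assumptions, not the project's Unity code: a pose is reduced to position plus focal length (rotation is omitted for brevity), each shot has an explicit duration in seconds, and the names `Shot` and `pose_at` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# (x, y, z, focal length); rotation left out to keep the sketch short.
Pose = Tuple[float, float, float, float]


@dataclass
class Shot:
    poses: Sequence[Pose]  # one pose per source frame; one pose if static
    duration: float        # seconds the shot lasts on screen


def pose_at(shots: Sequence[Shot], t: float) -> Pose:
    """Return the camera pose at time t (seconds) on the timeline."""
    if not shots:
        raise ValueError("timeline is empty")
    t = max(t, 0.0)
    for shot in shots:
        if t < shot.duration:
            # Map elapsed time within the shot to a stored pose index.
            index = int(t / shot.duration * len(shot.poses))
            return shot.poses[min(index, len(shot.poses) - 1)]
        t -= shot.duration  # move on to the next shot
    return shots[-1].poses[-1]  # past the end: hold the last pose


if __name__ == "__main__":
    timeline = [
        Shot([(0, 0, 0, 35)], 2.0),                    # static hold
        Shot([(0, 0, 0, 35), (1, 0, 0, 35),
              (2, 0, 0, 40), (3, 0, 0, 40)], 4.0),     # moving shot
        Shot([(9, 9, 9, 50)], 1.0),                    # static hold
    ]
    print(pose_at(timeline, 3.5))
```

A static shot is simply a shot with one stored pose, so the same lookup serves both cases. Picking the nearest stored pose keeps the original frame-by-frame rhythm; interpolating between poses would give smoother motion at the cost of departing from the recovered data.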

Future Work

VR Experience: extract the spatial sequence in a film (how the camera moves across space) for viewers to experience

Other Film Inputs: try applying the same camera motion sequence from an outstanding film to an architectural design presentation.

Lighting: reproduce lighting temporal qualities from films

Adaptation: enable adaptation of temporal qualities in the reconstruction phase instead of complete replication.

Download The Paper: Temporality Transfer Between Media