Dream by Day XR

Exploring Tangible Input for VR Interactive Storytelling

Dream by Day is a VR experience that lets participants alter the virtual world by interacting with tangible objects.

It explores how tangible interaction can enhance players’ sense of presence in VR.

It asks: How could we design tangible objects for a meaningful VR experience?

How could those objects afford interaction behaviors? And how could those behaviors relate to the overall experience and story?


Independent Work at MIT Media Lab | 2019

Adviser: Sandra Rodriguez

Skills: VR Experience Design, VR Drawing, Unity Development, Sound Design

Device: HTC Vive, Vive Tracker, Leap Motion

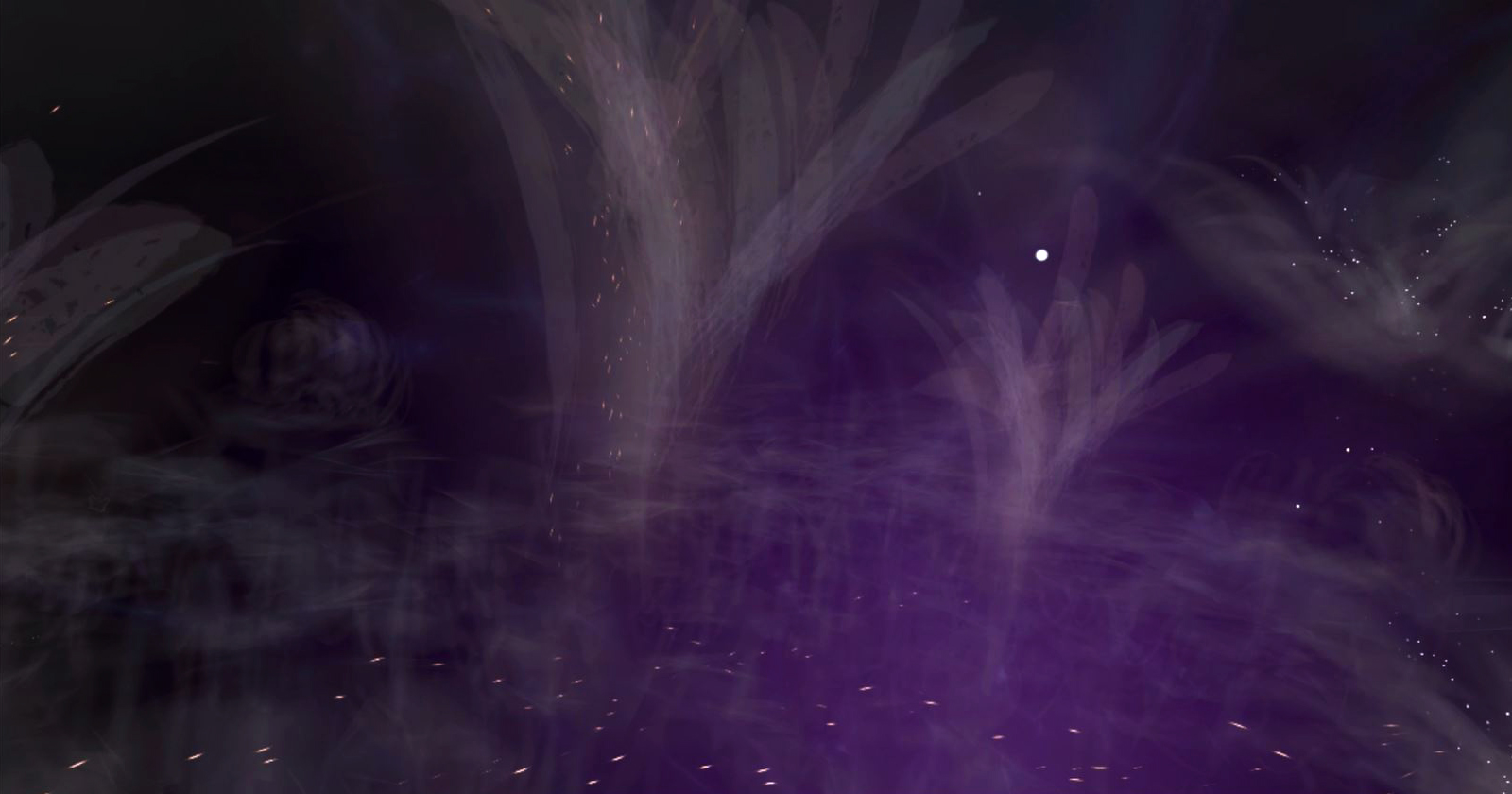

The Experience

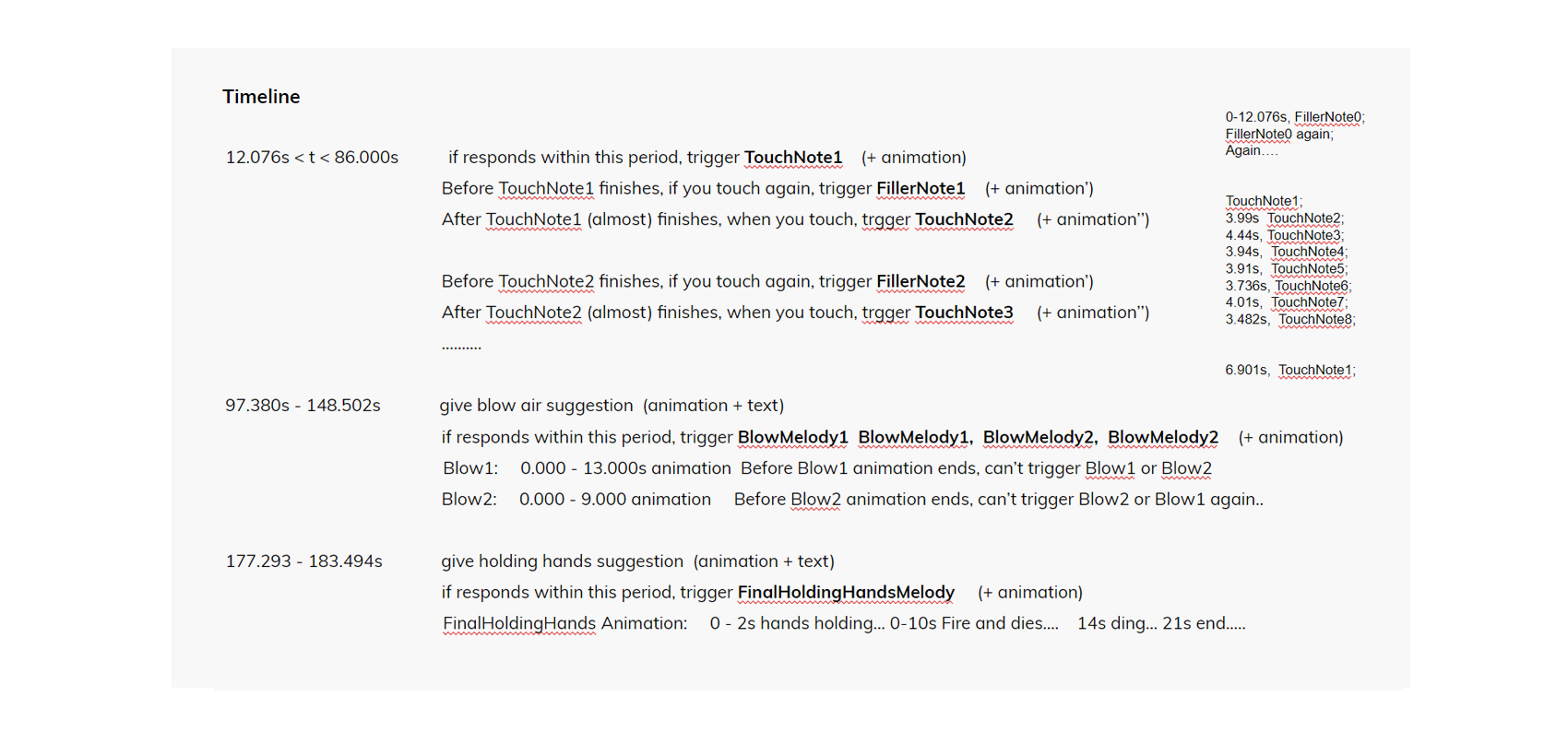

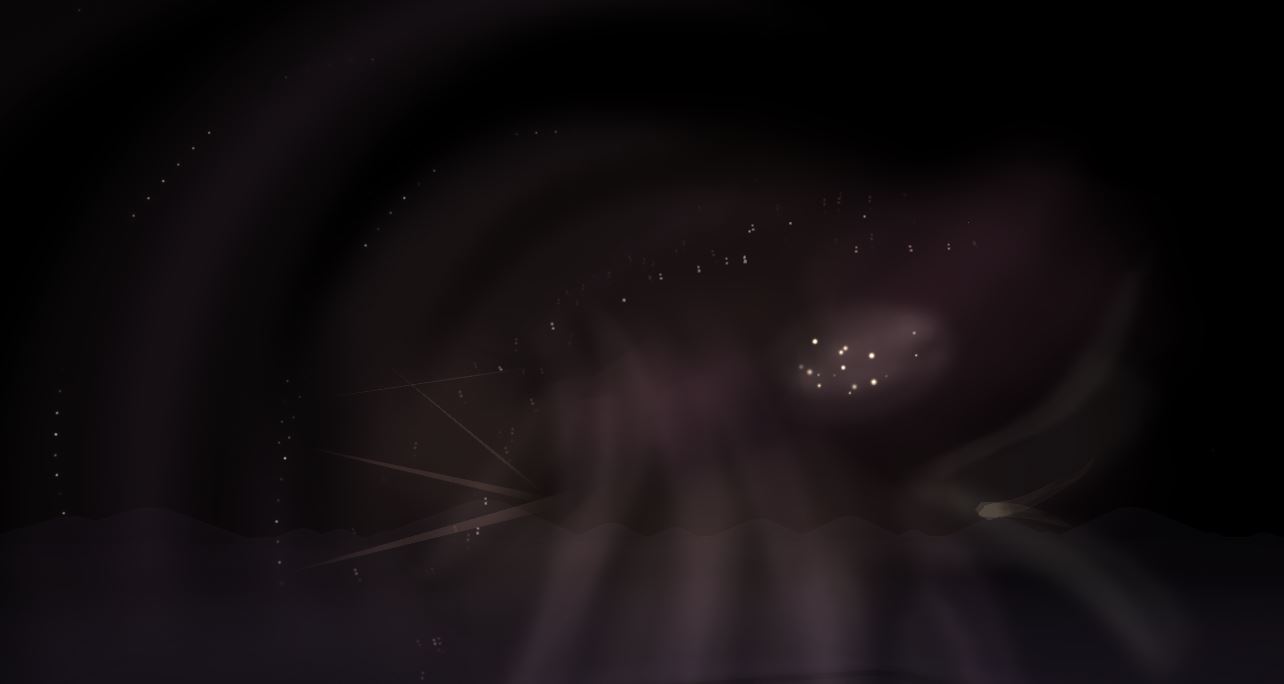

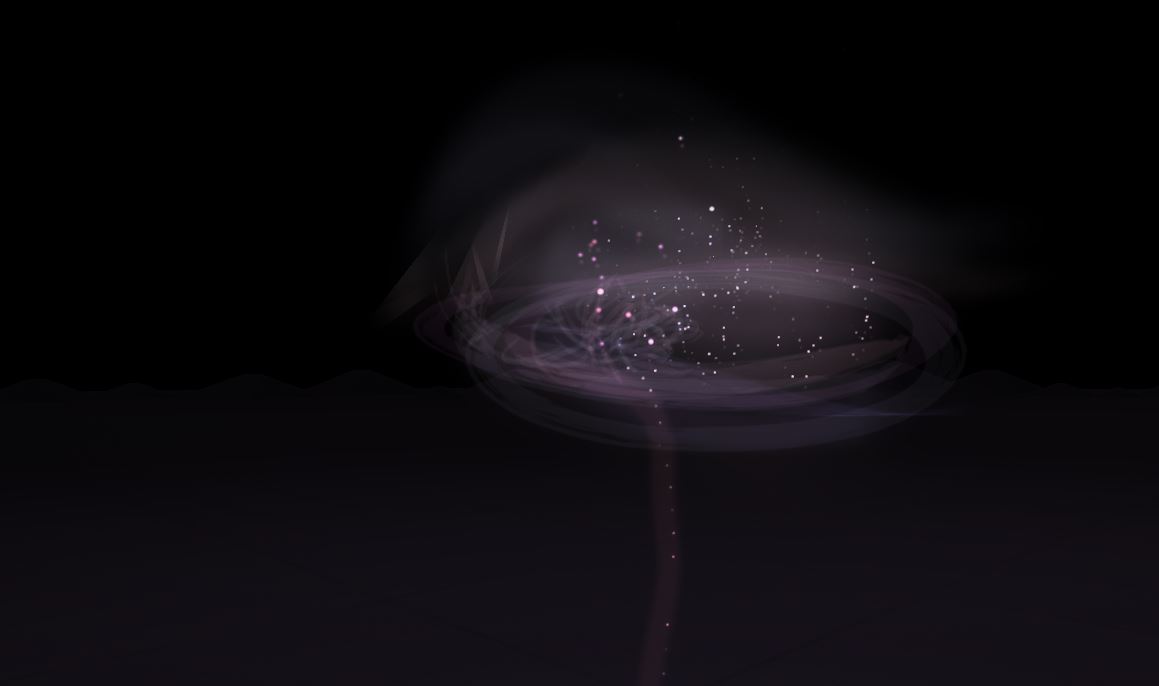

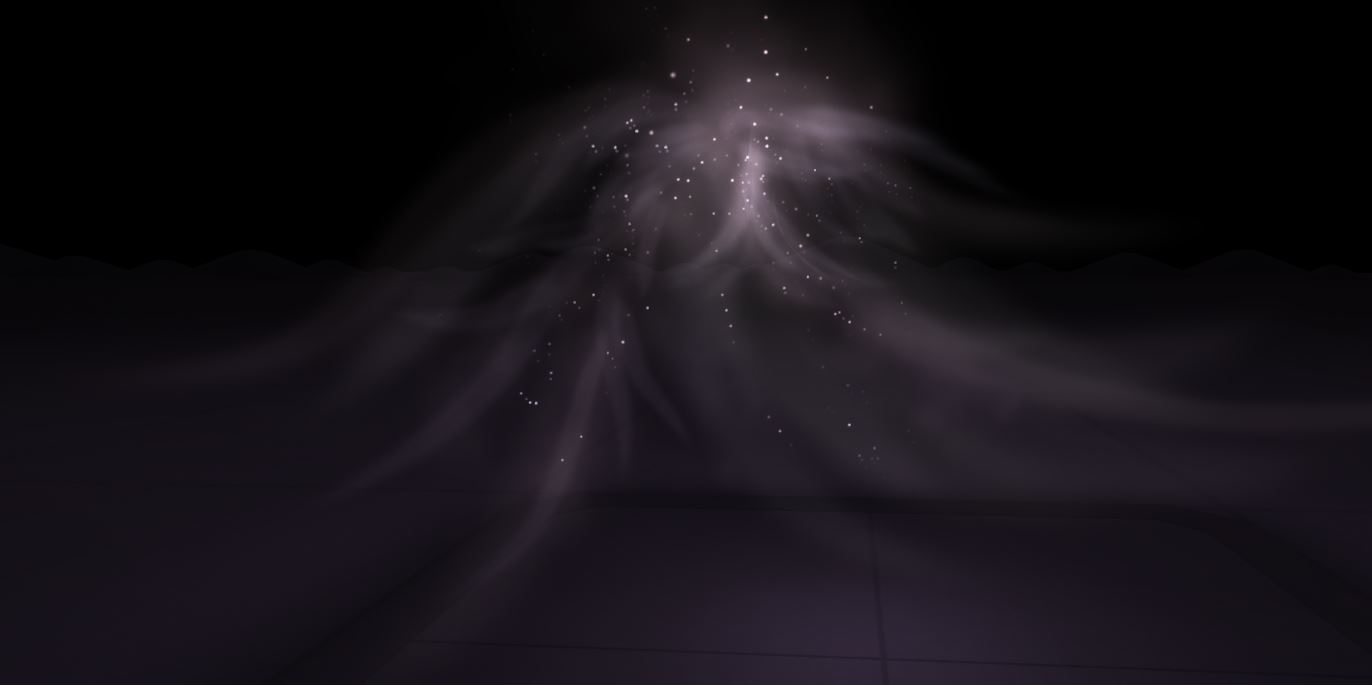

Dream by Day is a journey from reality into your dream. It all starts with tapping a glass ball called the “Dream Ball”.

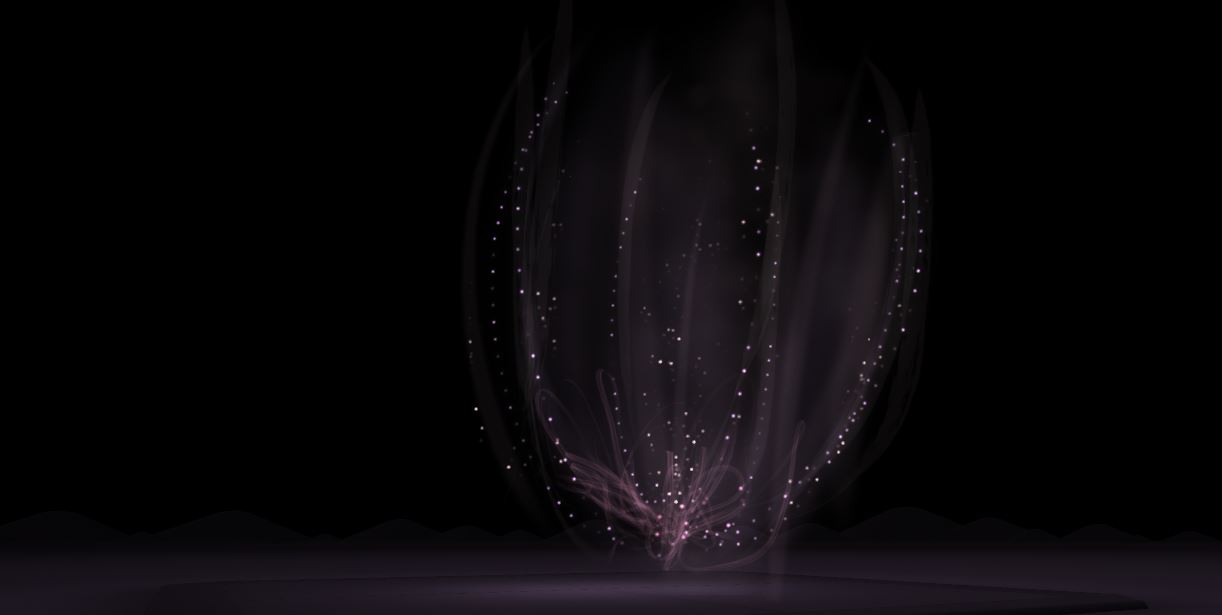

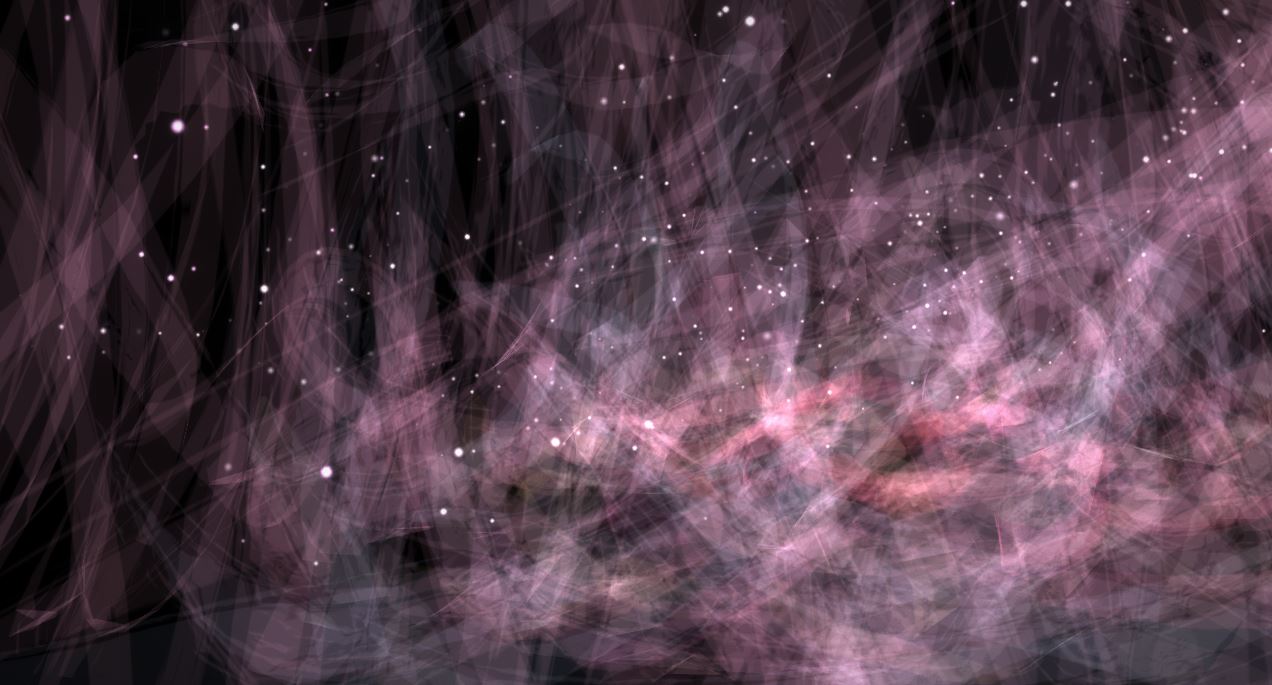

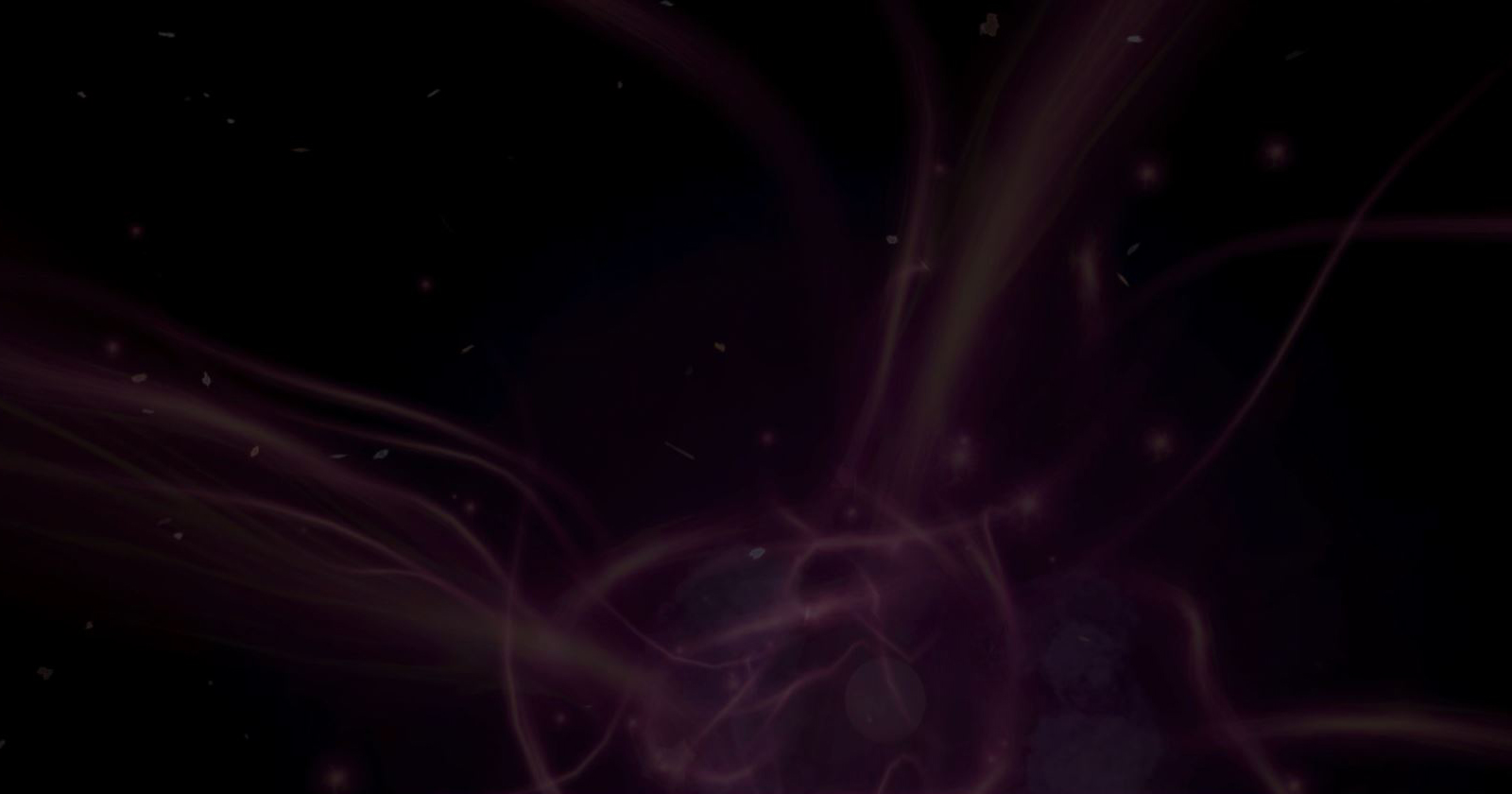

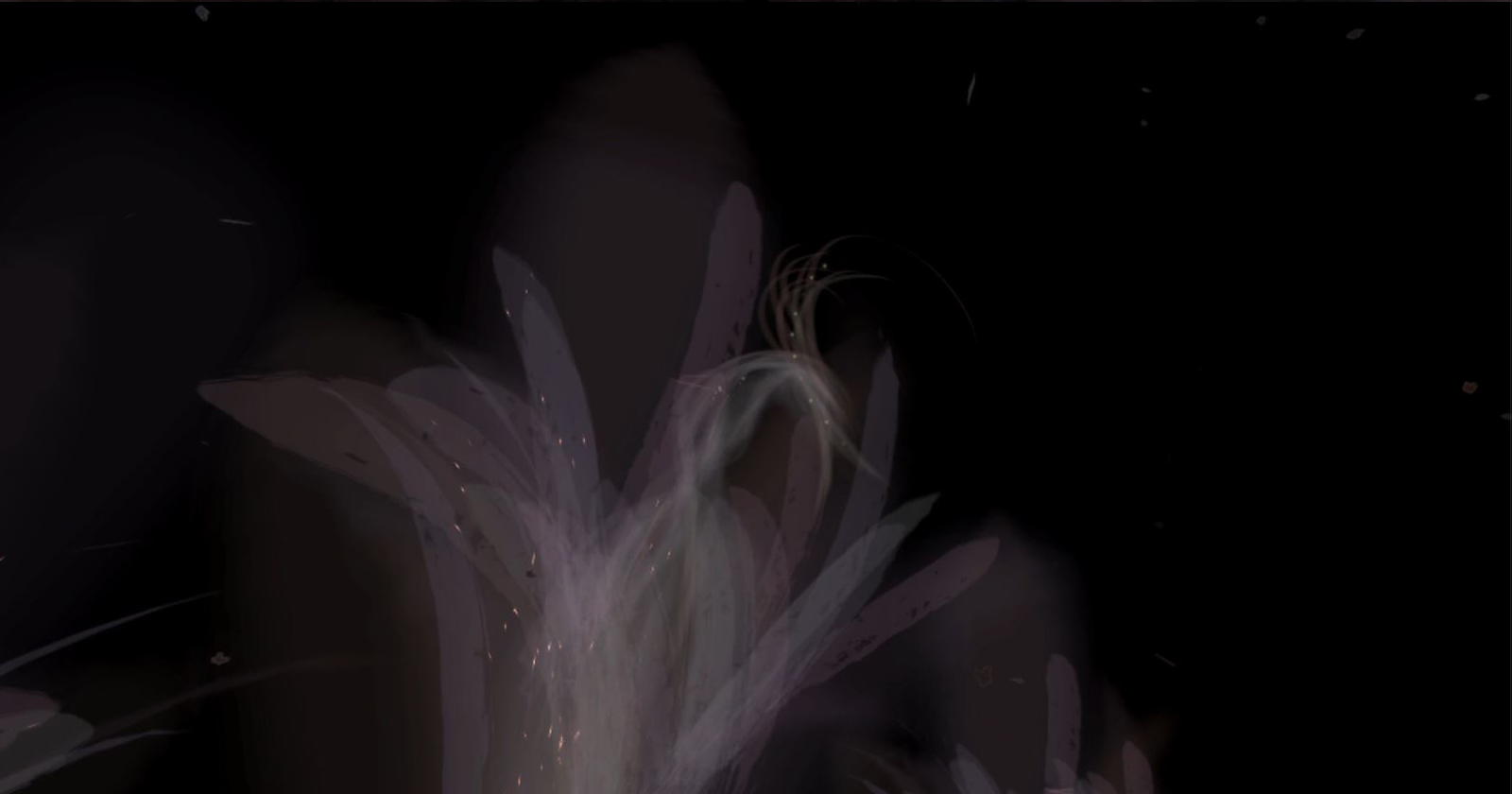

When you touch the Dream Ball, things start leaking out of it, revealing an ephemeral wonderland full of strange fauna and flora.

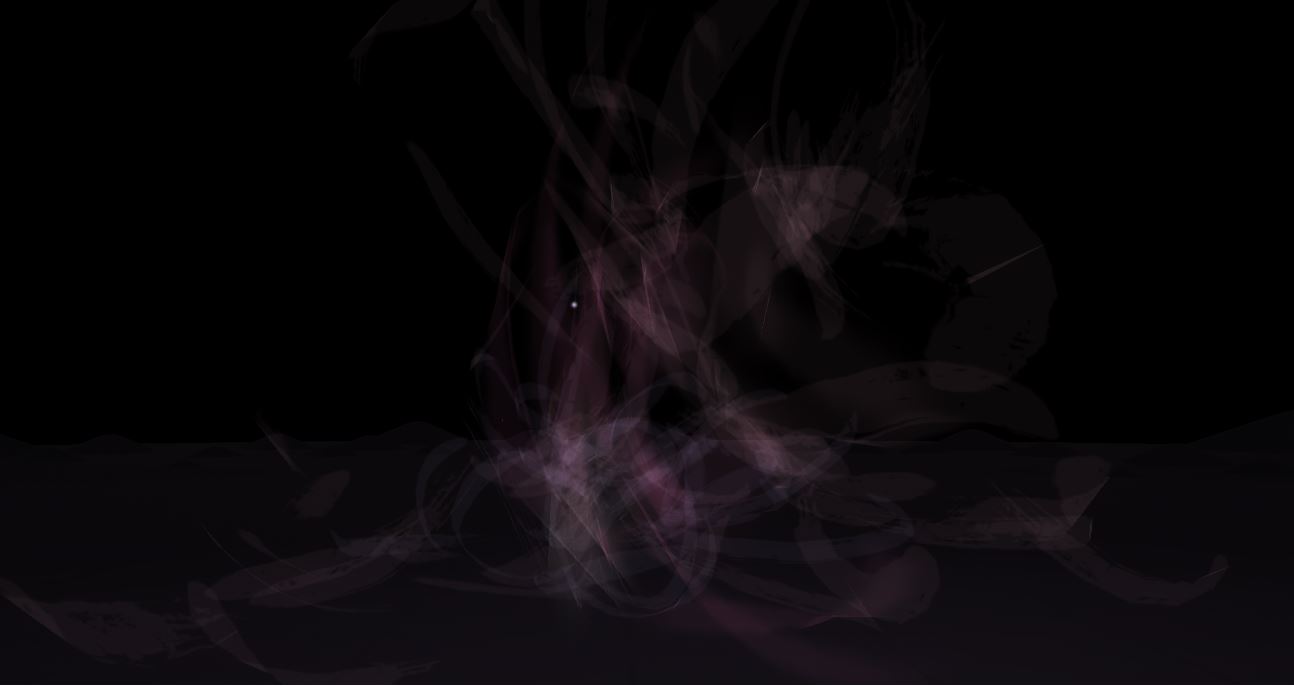

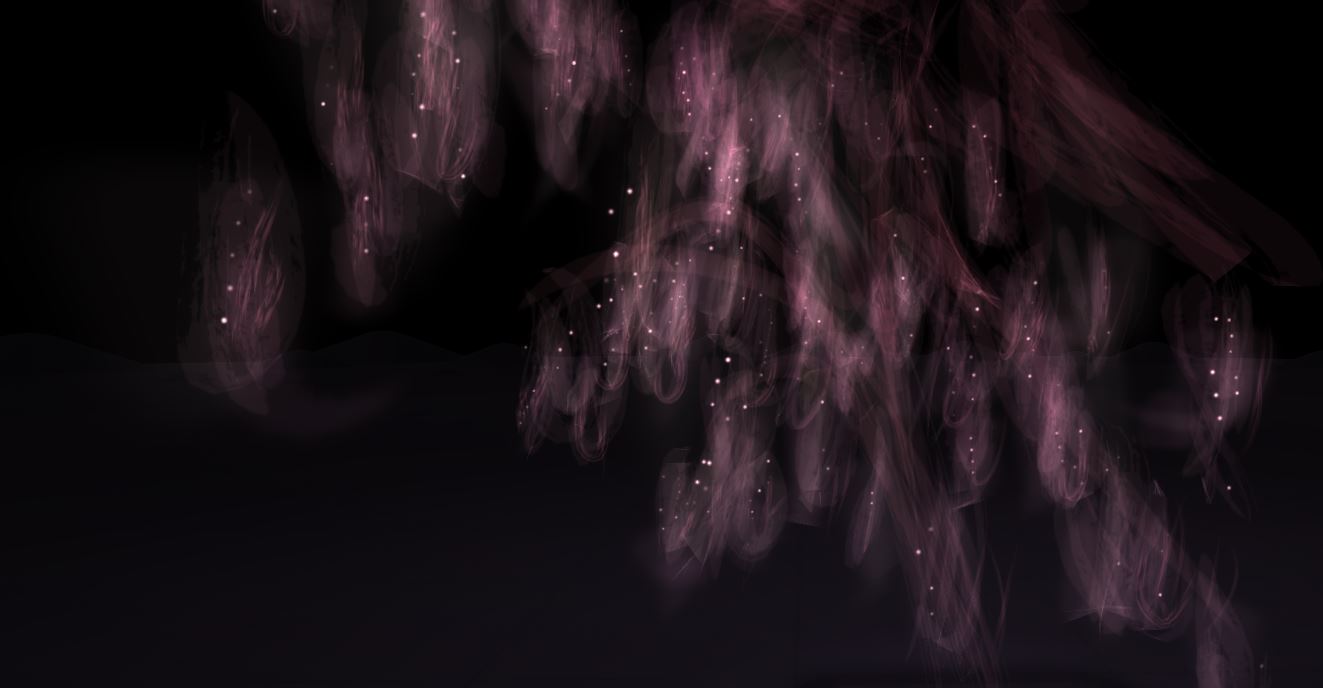

Each subsequent touch adds new creatures and a unique melody to the dreamland.

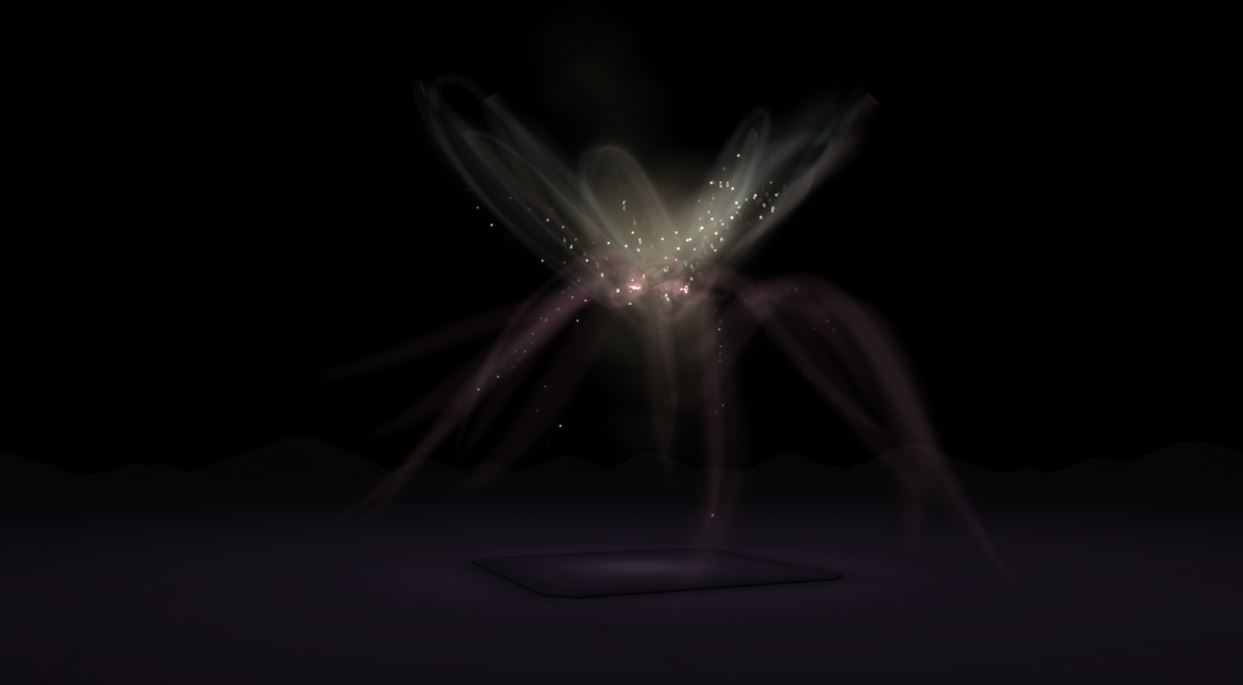

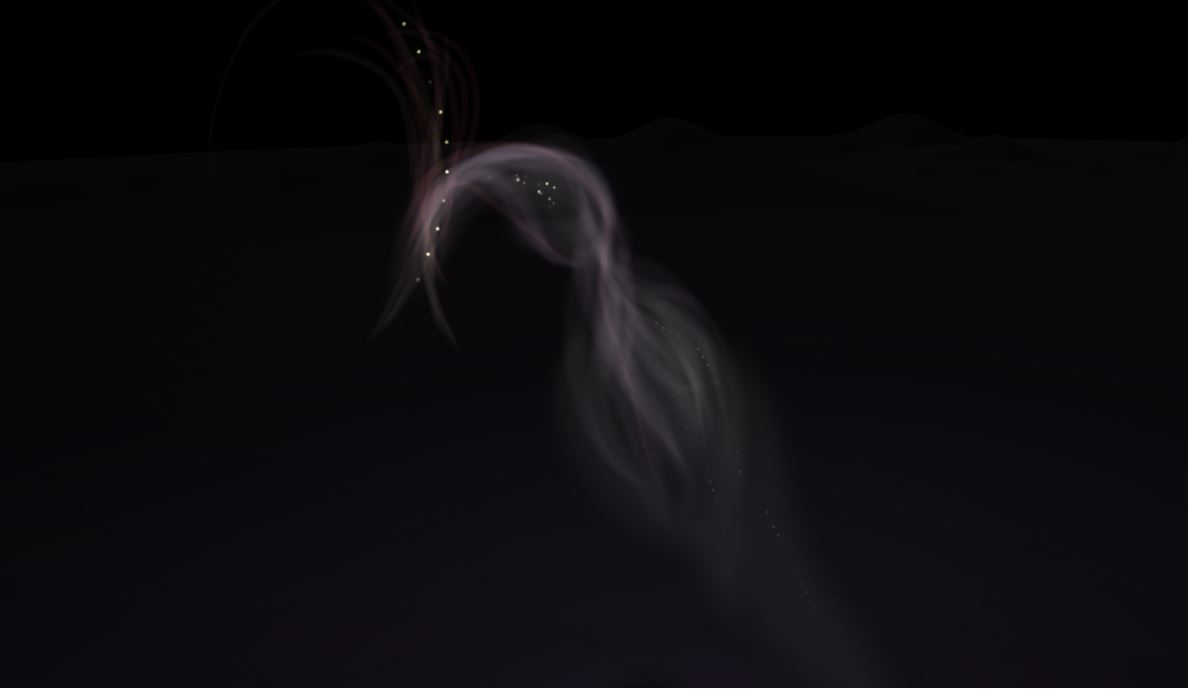

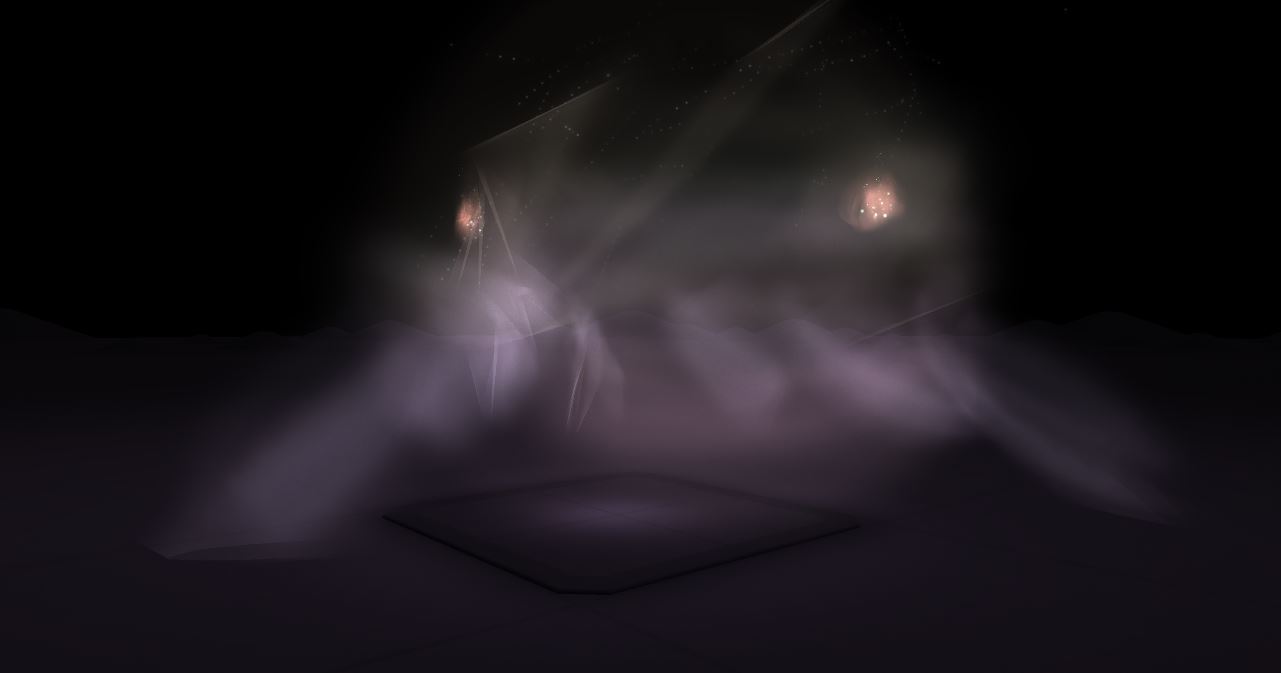

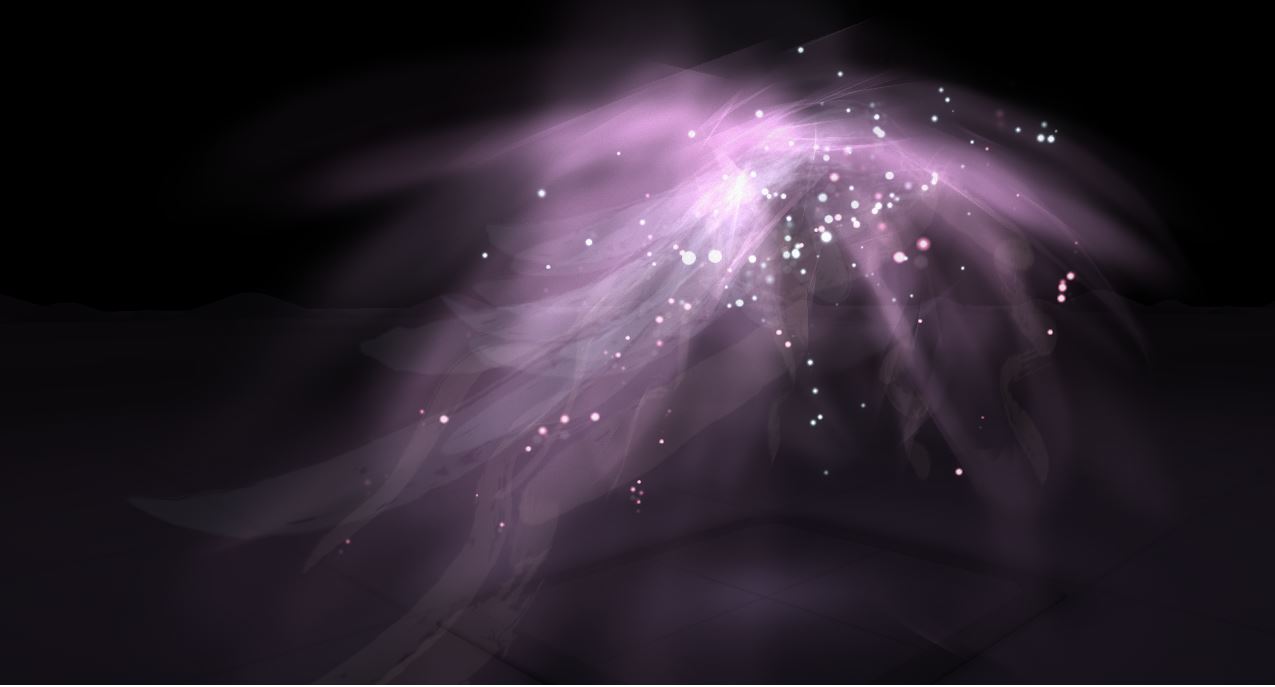

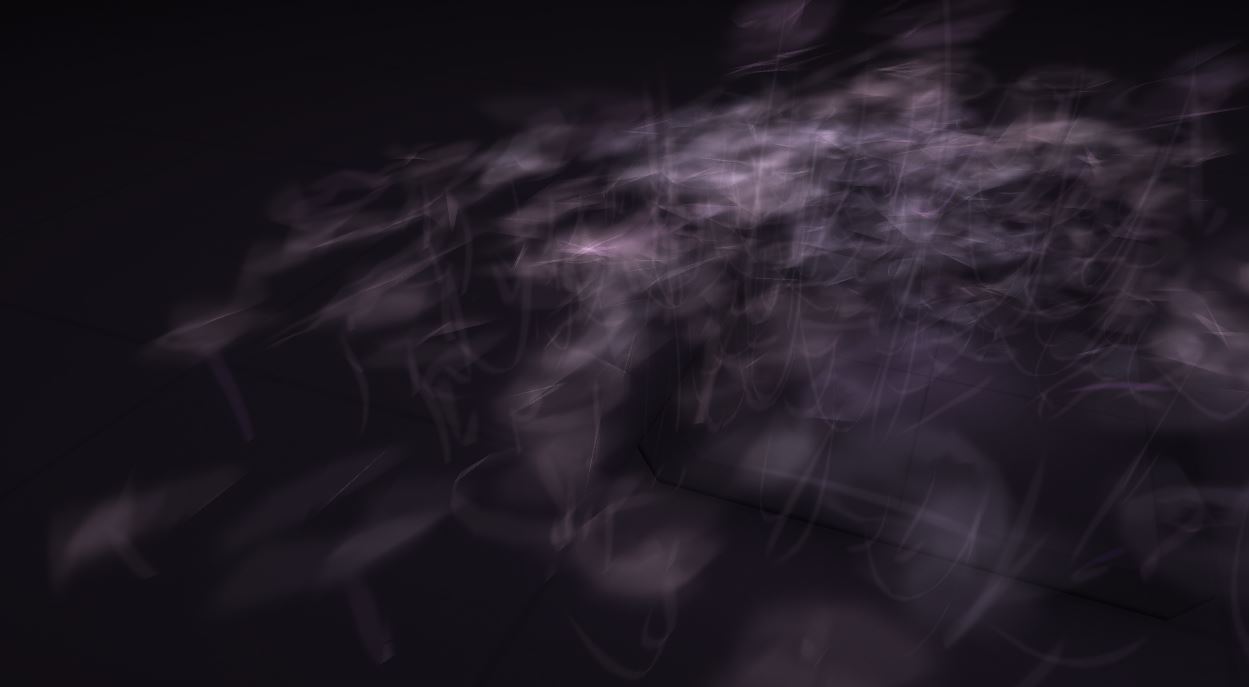

Once your dream is fully loaded, you blow air onto the Dream Ball to trigger waves and fog that follow your breathing pattern.

Finally, when your breathing calms down, you hold the Dream Ball with both hands to bring the experience to a fiery close.

The Interaction

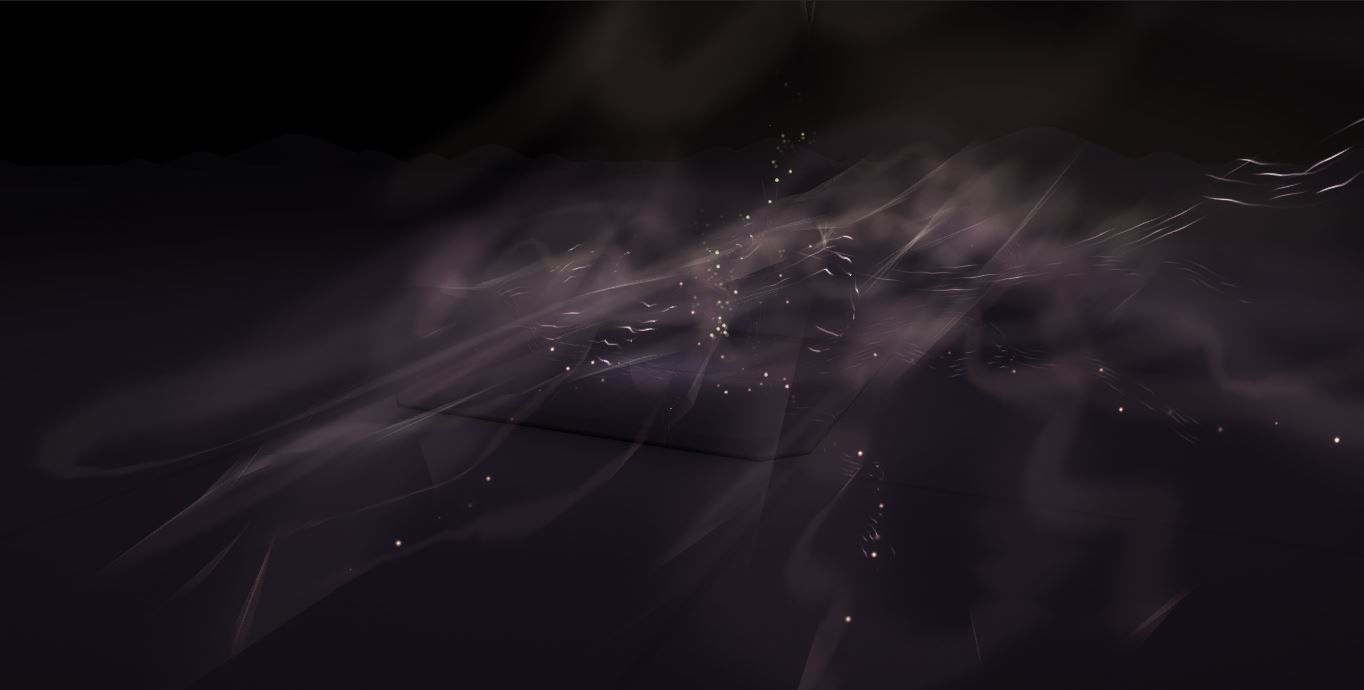

There are three types of interaction: “Touch”, “Blow Air”, and “Hold With Both Hands”.

Each one triggers different behaviors and visuals in the scene, either building up a mood or pushing the story into its next stage.
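The mapping from interactions to story stages can be pictured as a small state machine. The Python sketch below is illustrative only (the project itself was built in Unity): the stage names, the returned effect labels, and `TOUCHES_TO_LOAD` are hypothetical, since the page does not say how many touches it takes to fully load the dream.

```python
from enum import Enum, auto


class Interaction(Enum):
    TOUCH = auto()
    BLOW_AIR = auto()
    HOLD_BOTH_HANDS = auto()


class Stage(Enum):
    REALITY = auto()        # before the first touch
    DREAM_LOADING = auto()  # touches keep adding creatures and melodies
    BREATHING = auto()      # blowing air drives waves and fog
    FINALE = auto()         # holding with both hands ends the dream in fire


# Hypothetical: number of touches before the dream counts as "fully loaded".
TOUCHES_TO_LOAD = 4


class StoryController:
    """Sketch: decide what an interaction means at the current story stage."""

    def __init__(self, touches_to_load: int = TOUCHES_TO_LOAD) -> None:
        self.stage = Stage.REALITY
        self.touches = 0
        self.touches_to_load = touches_to_load

    def handle(self, interaction: Interaction) -> str:
        if self.stage is Stage.REALITY and interaction is Interaction.TOUCH:
            self.stage = Stage.DREAM_LOADING
            self.touches = 1
            return "reveal_wonderland"
        if self.stage is Stage.DREAM_LOADING and interaction is Interaction.TOUCH:
            self.touches += 1
            if self.touches >= self.touches_to_load:
                self.stage = Stage.BREATHING  # dream is fully loaded
            return "add_creature_and_melody"
        if self.stage is Stage.BREATHING and interaction is Interaction.BLOW_AIR:
            return "waves_and_fog"
        if self.stage is Stage.BREATHING and interaction is Interaction.HOLD_BOTH_HANDS:
            self.stage = Stage.FINALE
            return "end_in_fire"
        # The interaction does not fit the current stage; the story does not advance.
        return "off_script"
```

Keeping the stage in one place means the same gesture (a touch, say) can mean "open the dream" early on and "add another creature" later, which matches how the experience reuses a single object throughout.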

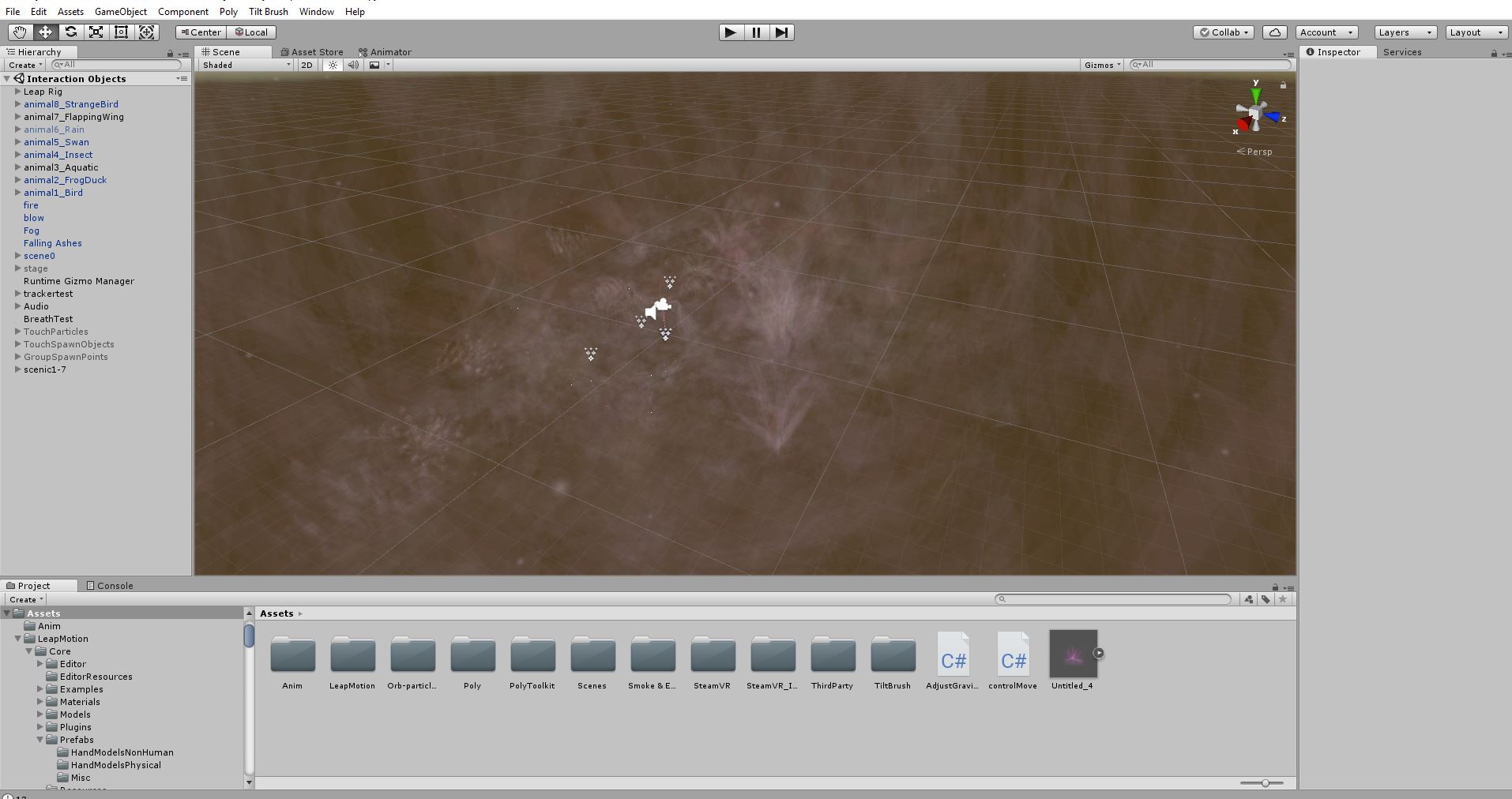

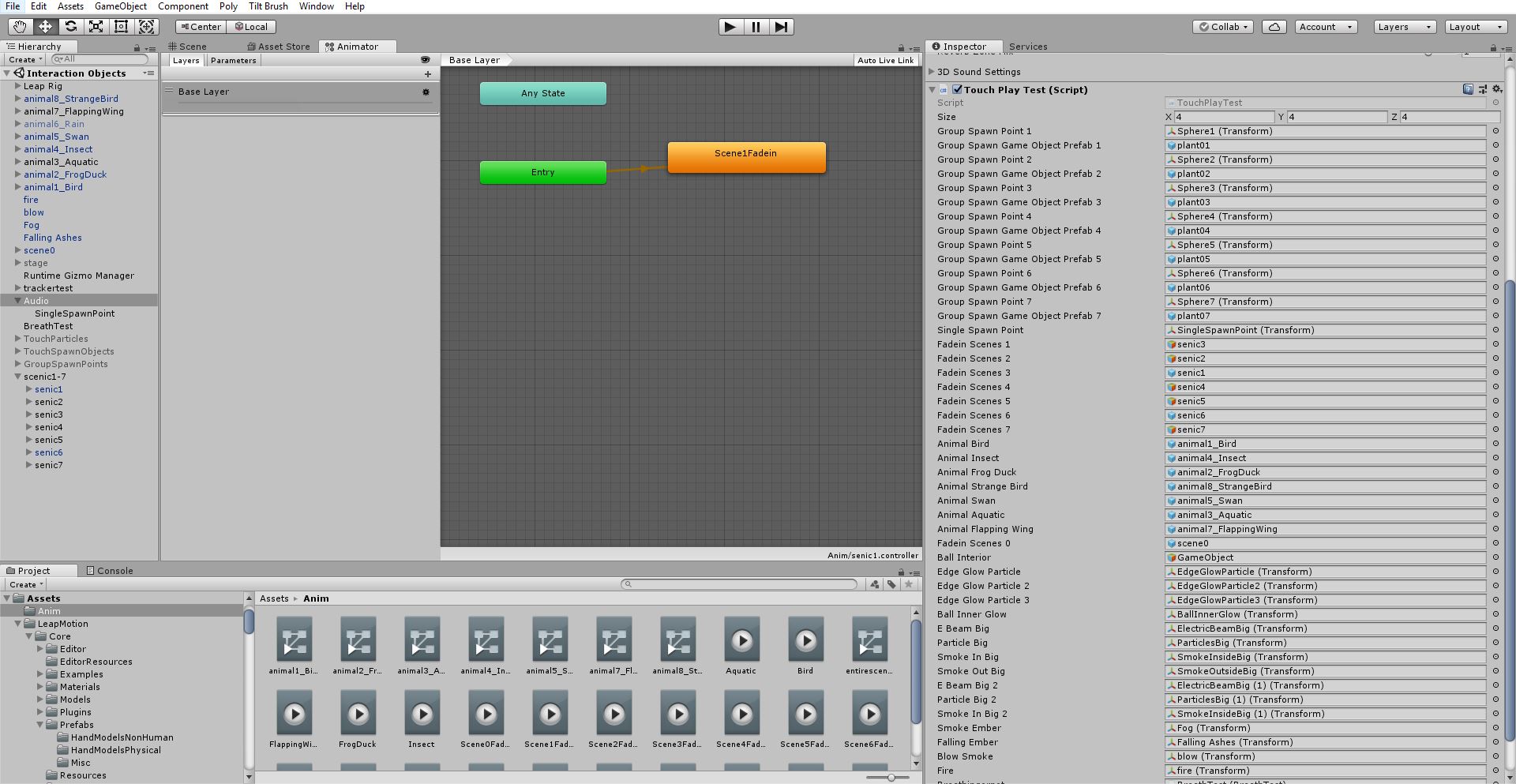

Technical Solution

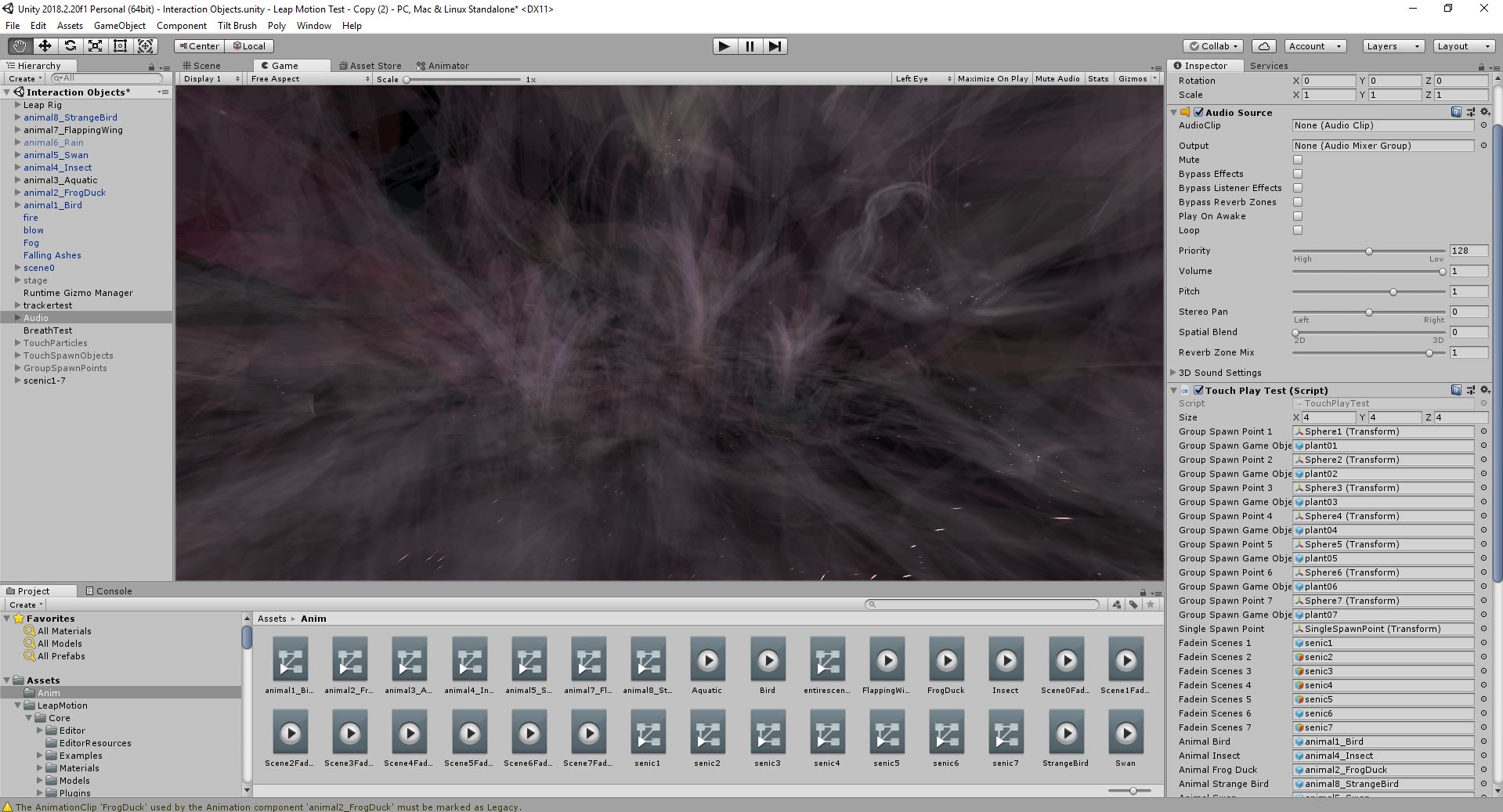

Leap Motion captures hand movement, while an HTC Vive Tracker registers the real-time position and speed of the ball. Both the hand data and the object data can therefore be mapped into VR, where the Leap Motion interaction manager determines which interaction behavior is taking place.
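As a rough illustration of how the combined hand and ball data might be interpreted, here is a minimal Python sketch that classifies contact from two palm positions and the tracked ball's center. It assumes the ball is a sphere and uses palm points rather than full hand colliders; `BALL_RADIUS_M`, `CONTACT_MARGIN_M`, and the function names are hypothetical. The page does not describe how blowing air is detected, so the sketch covers only “Touch” and “Hold With Both Hands”.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical values: the real ball size and contact tolerance are not stated.
BALL_RADIUS_M = 0.08
CONTACT_MARGIN_M = 0.02


def hand_touches_ball(palm: Vec3, ball_center: Vec3,
                      radius: float = BALL_RADIUS_M,
                      margin: float = CONTACT_MARGIN_M) -> bool:
    """True if a palm point lies within the ball's radius plus a small margin."""
    return math.dist(palm, ball_center) <= radius + margin


def classify_contact(left_palm: Optional[Vec3], right_palm: Optional[Vec3],
                     ball_center: Vec3) -> str:
    """Map tracked palms (None = hand not tracked) and the ball position to a contact type."""
    hands = [p for p in (left_palm, right_palm) if p is not None]
    touching = sum(hand_touches_ball(p, ball_center) for p in hands)
    if touching == 2:
        return "hold_with_both_hands"
    if touching == 1:
        return "touch"
    return "none"
```

A small margin beyond the physical radius helps because hand tracking and the tracker's reported position rarely line up perfectly with the real surface of the ball.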

Design Process

The process of designing “Dream by Day” was a rather iterative and lateral one.

Initially, all I had in mind was a dream ball, a vague story, and a few interactions built around them.

Then I started editing music and sound for interactions and for the environment.

It turned out that music was an effective means to judge whether different scenes, objects, and interactions could fit together.

And that led to several rounds of refining the original story and finalizing the scenes, objects, and their behaviors.

Simultaneously, I built technical MVPs to prove that tangible interaction in VR could work using Vive Tracker and Leap Motion.

What followed was making assets in Tilt Brush, assembling the whole VR experience in Unity, and user testing.

These phases informed one another and gradually came together as the deadline approached :)

Moodboard

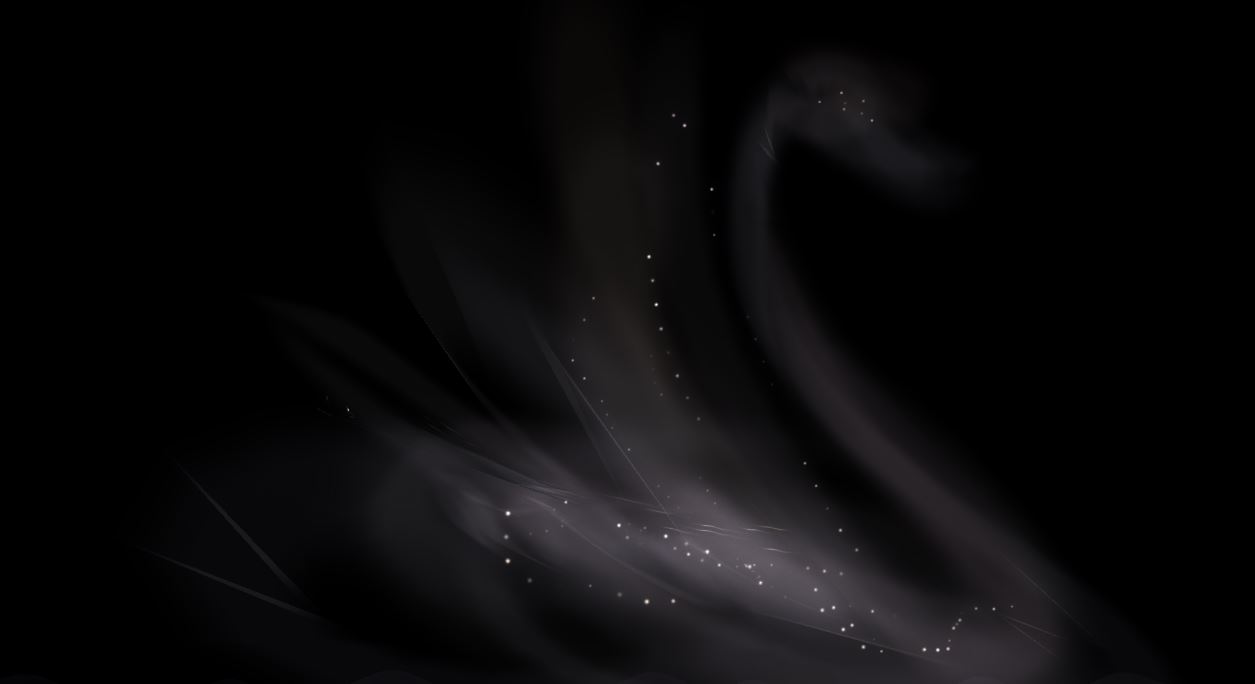

I was influenced by the works of Brett Whiteley and William Blake (plus some Kate Bush album covers).

The aesthetic goal is to portray a poetic, hazy dream world where dimensions dissolve and objects intertwine.

Tilt Brush Drawings

The fauna, the flora, and the scene itself were drawn in Tilt Brush, using its Soft Highlighter and Velvet Ink brushes.

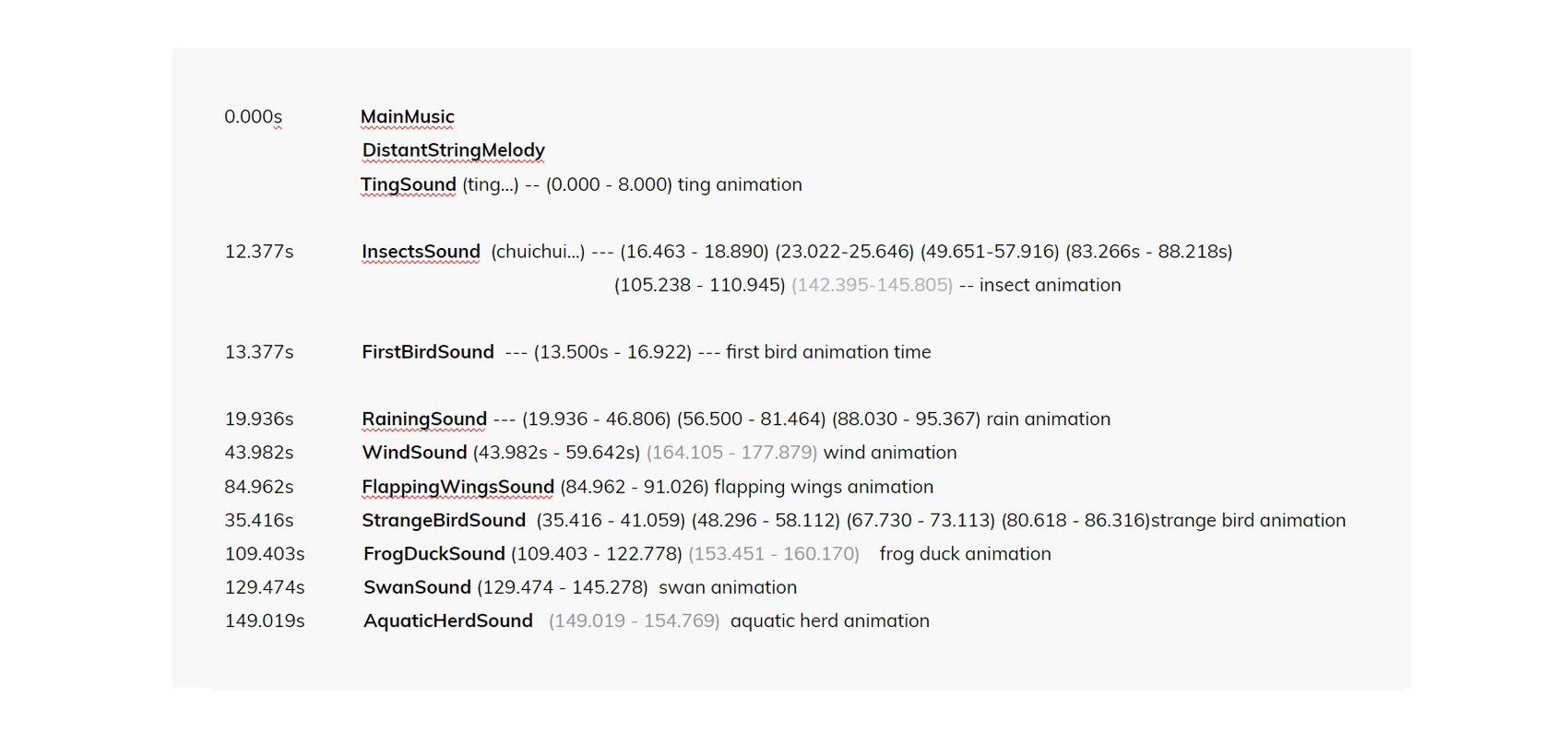

Sound Design

Sound and music are central to this experience. They helped set the mood and define both the look and the interactive behaviors.

Sound and music in Dream by Day are divided into three categories: “main music”, “environmental sound”, and “interaction sound”.

Interaction sound in turn comes in two types: “main interaction sound” and “sub-interaction sound”. The sub-interaction sound is programmed to accommodate unexpected or erroneous user interactions, so that the overall auditory experience stays consistent and pleasing even when users stray from the intended path.
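One way to realize the main/sub split is a lookup that falls back to a quieter clip whenever an interaction does not match what the current stage expects. This is a hypothetical Python sketch; the clip names, stage keys, and volume levels are illustrative assumptions, not the project's actual assets or settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoundEvent:
    clip: str
    volume: float


# Hypothetical clip names keyed by the (stage, interaction) pairs the story expects.
MAIN_SOUNDS = {
    ("reality", "touch"): "dream_opening",
    ("dream_loading", "touch"): "melody_layer",
    ("breathing", "blow_air"): "wave_swell",
    ("breathing", "hold_with_both_hands"): "fire_finale",
}

# Hypothetical softer clips used when an interaction does not fit the current stage.
SUB_SOUNDS = {
    "touch": "soft_chime",
    "blow_air": "breath_whisper",
    "hold_with_both_hands": "low_hum",
}


def pick_interaction_sound(stage: str, interaction: str) -> SoundEvent:
    """Return the main sound for expected interactions, else a subdued sub-sound."""
    main = MAIN_SOUNDS.get((stage, interaction))
    if main is not None:
        return SoundEvent(main, volume=1.0)
    # Off-script input still gets audible feedback, just quieter, so the
    # soundtrack neither goes silent nor jumps ahead in the story.
    return SoundEvent(SUB_SOUNDS.get(interaction, "soft_chime"), volume=0.4)
```

The design choice here is that every input produces some sound, which keeps the user from feeling ignored, while only expected inputs produce the sounds that move the music forward.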

Unity Development

Thanks to the Leap Motion Interaction Engine, development was easier than expected. I used a script to detect when the virtual hand (captured through Leap Motion) and the virtual ball (represented in VR as an object tracked by the Vive Tracker) intersect, and to trigger different interaction behaviors and effects depending on when in the storyline that contact happens.
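The script itself is not shown here. One detail such a script usually needs is to fire once when contact begins rather than on every frame the hand stays on the ball, which is what Unity's `OnTriggerEnter` callback provides. The hypothetical `ContactTrigger` below sketches that rising-edge logic in plain Python, fed one intersection result per frame.

```python
from typing import Callable, List


class ContactTrigger:
    """Hypothetical helper: call on_enter once each time contact begins.

    Feed it one boolean per frame saying whether hand and ball intersect.
    """

    def __init__(self, on_enter: Callable[[int], None]) -> None:
        self.on_enter = on_enter
        self.in_contact = False
        self.frame = 0

    def update(self, intersecting: bool) -> None:
        if intersecting and not self.in_contact:
            self.on_enter(self.frame)  # rising edge: contact just started
        self.in_contact = intersecting
        self.frame += 1


# Usage: a hand that touches, lingers, lets go, and touches again.
fired: List[int] = []
trigger = ContactTrigger(fired.append)
for hit in [False, True, True, True, False, True, False]:
    trigger.update(hit)
# fired == [1, 5]: one event per touch, not one per frame of contact
```

The callback receives the frame index only for illustration; in practice it would hand the event to whatever decides what a touch means at the current point in the story.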


What I Learned

“Start with an MVP, then build on it incrementally.”

“Always prove your workflow before investing more time in it. Especially with new tools, prove the workflow iteratively.”

Future Work

1. Place the environmental sounds in the scene using spatial audio.

2. Provide conversational user education before the main experience.

3. Create more sophisticated animation using AnimVR or C# programming.

4. Replace the Vive Tracker with a miniaturized version.